Data scientist’s primer to analysis of mass cytometry data

Contributors: Lars Rønn Olsen, Christina Bligaard Pedersen, Mike Leipold, and Holden Maecker

1 Preamble

1.1 The purpose of this site

The aim of this site is to introduce cytometry data - specifically mass cytometry data - to data scientists and bioinformaticians not familiar with the technology, the unique challenges relating to the technical and biological origins of the data. The content will be updated periodically as new tools and methods are published.

Along with discussions, we have included code for common processing and analysis tasks for R. Flow cytometry as a scientific discipline has been around for half a century at this point, and a plethora of software and data analysis packages has been created using a multitude of different programming languages. Common to the majority of them, is the heavy focus on the use of The Flow Cytometry Standard (FCS) format. While this format certainly has its strengths, it (and the analysis software developed for it) can seem somewhat convoluted to the uninitiated. Rather than attempting to update these packages for mass cytometry or introduce workarounds, we here focus on extracting and working with the raw expression matrices that most bioinformaticians know from other data types - such as for example transcriptomics (probe and sequence-based alike). The rationale is that once a bioinformatician has an expression matrix and a working understanding of the technical and biological idiosyncracies of the data, most, if not all, common analysis algorithms for expression data can be adapted and applied.

We will also present a selection of common analysis tools. Not with the explicit purpose of applying them in their packaged form, but to explain what goes on under the hood to enable these useful analyses to be applied in a more flexible and customizable way. The main aim here is enabling a high level of autonomy for data scientists working with mass cytometry data by dissecting both the data and common algorithms to its core workable components.

The site consists of a series of code snippets for preparing and analyzing the data. The examples are based on two different datasets: One is a dataset of 21 healthy control individuals (described below and available from FlowRepository), and the other is a case/control set from Bodenmiller et al. (2012), which contains 16 samples in total.

1.2 The example data sets

1.2.1 Healthy donor samples

21 samples of PBMC from healthy control individuals, stained with the Leipold and Maecker 2015 protocol, mixed with EQ normalization beads (Fluidigm Corp), and acquired on a Fluidigm CyTOF Helios mass cytometer using default acquisition parameters. The data exists in two forms: 1) the raw FCS files as generated by the Helios, 2) the samples normalized using the MATLAB method (described below) - these are files with “normalized” in the file name. Each row in the expression matrix is an event, of which we are only interested in those that represent live, intact, singlet cells (i.e. we want to filter out beads, dead cells, doublets, debris, etc.). Each column contains information about the cells - some are parameters from the data collection by the instrument (e.g. event length, time, etc.), some are markers primarily used for pre-gating (DNA stain, live/dead stain, beads, etc.), some are lineage markers (needed to determine the cell type), and some are activation or cell state makers. Here is a table with descriptions of each column in the example expression files.

| Isotope | Parameter | Description |

|---|---|---|

| Ce140Di | Bead | Normalization parameter isotope unique to Fluidigm EQ normalization beads; used to distinguish them from cells during gating |

| In115Di | Dead | Pre-gating parameter Maleimide-DOTA small molecule as live-dead (cannot efficiently enter cells with undisturbed membranes) |

| Ir191Di | DNA1 | Pre-gating parameter DNA intercalator (intact cells have high levels) |

| Ir193Di | DNA2 | Pre-gating parameter DNA intercalator (intact cells have high levels) |

| Nd142Di | CD19 | Lineage marker B cell marker |

| Nd146Di | IgD | Lineage marker B cell marker |

| Dy164Di | CD20 | Lineage marker B cell marker |

| Nd143Di | CD4 | Lineage marker T cell marker |

| Nd144Di | CD8 | Lineage marker T cell marker |

| Nd148Di | CD11c | Lineage marker used to gate mDCs |

| Sm149Di | CD16 | Lineage marker used mostly to gate NK cells |

| Nd150Di | CD3 | Lineage marker used to gate T cells |

| Sm154Di | CD14 | Lineage marker used to gate Monocytes |

| Gd155Di | CCR6 | Lineage marker used to gate Th1/Th2/Th17 cells |

| Gd158Di | CXCR5 | Lineage marker used to gate T follicular helper cells |

| Tb159Di | CXCR3 | Lineage marker used to gate Th1/Th2/Th17 cells |

| Gd160Di | CCR7 | Lineage marker used to gate Naive/Central memory/Effector memory/Effector T cells |

| Dy162Di | CD45RA | Lineage marker used to gate Naive/Central memory/Effector memory/Effector T cells |

| Ho165Di | CD127 | Lineage marker used to gate Tregs |

| Er166Di | CD33 | Lineage marker used to gate Monocytes |

| Er168Di | CD24 | Lineage marker used to gate Transitional B cells |

| Yb171Di | TCRgd | Lineage marker used to gate gamma-delta T cells |

| Yb173Di | CD123 | Lineage marker used to gate basophils and pDCs |

| Yb174Di | CD56 | Lineage marker used to gate NK cells and NKT cells |

| Yb176Di | CD25 | Lineage marker used to gate Tregs |

| Lu175Di | HLADR | General protein marker activation marker on NK and T cells |

| In113Di | CD57 | General protein marker differentiation marker found on CD8+ T cells and NK cells |

| Yb172Di | PD-1 | General protein marker found on T cells, modulates immune function |

| Tm169Di | ICOS | General protein marker found on T cells, modulates immune function |

| Er170Di | CD161 | General protein marker marker of IL17 producing T cells |

| Er167Di | CD28 | General protein marker found on T cells, necessary for immune activation |

| Gd156Di | CD94 | General protein marker found on CD8+ T cells and NK cells, modulates immune function |

| Gd157Di | CD86 | General protein marker found on monocytes, necessary for immune activation and survival |

| Eu151Di | CD38 | General protein marker found on T cells, B cells, and NK cells, necessary for immune function |

| Sm152Di | CD27 | General protein marker found on B and T cells, involved in modulation and activation of immune function |

| Eu153Di | CD11b | General protein marker found on cell of the innate immune system |

| Sm147Di | CD85j | General protein marker found on T and NK cells, regulates immune activation (inhibitory receptor) |

| BCKG190Di | 190BCKG | Background not used in gating |

| Pb208Di | 208Pb | Background not used in gating |

| Sn120Di | 120Sn | Background not used in gating |

| I127Di | 127I | Background not used in gating |

| Xe131Di | 131Xe | Background not used in gating |

We will use this dataset for demonstration of the basic FCS file handling and pre-processing steps as well as to demonstrate many of the tools, but for speed and ease, we will download and process 2/21 samples. For this, one needs to log into FlowRepository and get the files from the experiment FR-FCM-ZYAJ. We will use “081216-Mike-HIMC ctrls-001_01_normalized.fcs” and “081216-Mike-HIMC ctrls-1213_01_normalized.fcs” and rename these to pbmc_healthy_1.fcs and pbmc_health_2.fcs.

An R data object with the pre-processed healthy control samples (normalized, pre-gated, transformed - the entire process is detailed below) can be downloaded here if you are mostly interested in post-processing analyses. If so, you may skip to Data analysis section.

1.2.2 Case/control samples

To exemplify analysis of differential abundance, we also use a case/control dataset. We here use the data described by Bodenmiller et al. (2012), which consists of 8 samples of PBMC responding to B-cell receptor (BCR)/Fc receptor (FCR) cross-linking and 8 control PBMC samples. The data is included in the HDCytoData R package and can be loaded directly in R. Alternatively, the raw data can be downloaded here (download the files with “reference” and “BCR-XL” in the filename). These data are pregated and transformed.

1.3 Installing the R packages you will need

We use a variety of packages for the analyses below. In case you are missing some of them, here’s what you will need:

## From CRAN

cran_packages <- c("scales", "ggplot2", "dplyr", "ggridges", "RColorBrewer", "MASS", "Rtsne", "kohonen", "miscTools", "gplots", "Radviz", "igraph", "statmod", "devtools", "uwot", "cowplot", "limma", "matrixcalc", "plotrix", "JPEN", "VCA")

#install.packages()

## From BioConductor

bioc_packages <- c("flowCore", "ConsensusClusterPlus", "cydar", "CATALYST", "ncdfFlow", "edgeR", "HDCytoData")

# if (!requireNamespace("BiocManager", quietly = TRUE))

# install.packages("BiocManager")

# BiocManager::install()

## From source

github_packages <- c("nolanlab/cytofCore", "JinmiaoChenLab/cytofkit2", "cytolab/mem", "biosurf/cyCombine")

#devtools::install_github()The R session info is located at the bottom of the page.

2 The structure of data from the CyTOF system

The following sections include lots of code snippets you can use in your CyTOF workflow.

2.1 The Flow Cytometry Standard (FCS) format

The FCS format is a binary data file standard originally developed for storage of flow cytometry data. As well as the ion count matrix, it has slots for experiment information, channel information, etc. Traditionally, ion counts have been analyzed visually using FlowJo - a commercial software platform for analysis of single-cell cytometry experiments. FlowJo is largely GUI-based and requires a license for both academic and non-academic users. For more automated analysis, the following tools for R can be used to import, manipulate and convert FCS files - or extract the ion counts for downstream analysis in R or other programming environments.

- flowCore: facilitates many FCS file related functions. Includes the option to write new FCS files.

- flowStats: package for normalizing, gating, and basic and advanced analyses of flow cytometry data.

- cytofCore: contains a function to merge FCS files from disrupted CyTOF runs.

- CATALYST: contains functions for normalization, debarcoding, and quality control.

Example of basic FCS file handling:

# Load the flowCore package

library(flowCore)

# Read a single fcs file - remember to set the directory if you changed it since downloading the files

fcs_files <- list.files(path = '.', pattern='.fcs$', full=TRUE, ignore.case = TRUE)

fcs <- read.FCS(filename=fcs_files[1], transformation=FALSE, truncate_max_range = FALSE)

# Extract expression matrix

exprs <- fcs@exprs

# Explore the channels (columns in the expression matrix)

fcs@parameters@data

# Make colnames human readable using information in the parameter data slot

markers <- gsub(pattern = ".*_", replacement = "", x = as.vector(fcs@parameters@data$desc))

colnames(exprs)[which(!is.na(markers))] <- markers[which(!is.na(markers))]

# Merging fcs files (in case of interrupted runs)

# library(cytofCore)

# cytofCore.concatenateDirectoryFiles(inputDir="./", outputDir=".", pattern=NULL, overwrite=F, timeParam="time")2.2 Parameter harmonization

Note that these channels are freetext editable in the Fluidigm CyTOF software. This means that if you are dealing with files from multiple different runs from different instruments and/or different operators (or in some cases even different staining panels), you will need to harmonize your channel names to compare samples. The mass information (in the “name” column) is usually the most reliable, but even here errors can occur. When in doubt, consult with the operator before renaming the channels.

3 Data preprocessing

Preprocessing is essential in any mass cytometry analysis process. Key steps in preprocessing include: bead normalization, in which one adjusts for varying instrument performance over time; pre-gating, in which live intact singlet cells are obtained; compensation, which (if applicable) is used to correct for spillover between the signals of different heavy metal isotopes, and debarcoding, which (if applicable) is used to separate different samples in a multiplexed experiment.

For convenience, we here define the different types of channels (columns in the data matrix) used for different purposes:

pregating_channels <- c("Bead", "DNA1", "DNA2", "Dead", "Event_length")

lineage_channels <- c("CD57", "CD19", "CD4", "CD8", "IgD", "CD11c", "CD16", "CD3", "CD38", "CD27", "CD14", "CXCR5", "CCR7", "CD45RA", "CD20", "CD127", "CD33", "CD28", "CD161", "TCRgd", "CD123", "CD56", "HLADR", "CD25")

instrument_channels <- c("Time", "Event_length", "Center", "Offset", "Width", "Residual")3.1 Bead Normalization

Because CyTOF instrument performance may vary over time (both within a single run, but more prominently between different runs), it is important to normalize data in a way that limits the impact of the technical variation. The most commonly employed methods are the Fluidigm normalization (DVS Sciences, 2013) and MATLAB normalization (Finck et al., 2013). Both methods are based on adding beads carrying different heavy metal isotopes to the biological sample, and relying on the beads to leave an extractable signature that can be used to correct signal fluctuations. The Fluidigm normalization method uses a bead “passport” to normalize data to a global standard, while the MATLAB method normalizes using median bead intensities calculated across given experimental data files (e.g. files from different runs on the same machine).

3.1.1 Fluidigm bead normalization

The Fluidigm bead normalization method is based on the calculation of the mean isotope counts of the beads during production. This global bead profile, called the “Bead Passport”, is unique to each lot of beads synthesized and cannot be adjusted by the user. The first step in the normalization process is for the software to distinguish the beads from the cell sample in question. This is performed using all the major isotopes of the beads (Ce140, Eu151, Eu153, Ho165, and Lu175 for the current Fluidigm EQ beads). The normalization factor is calculated as the ratio between the Bead Passport and the measured counts for each of the beads. The bead channels span a large range of the mass spectrum, and using linear interpolation to masses between or beyond the bead masses allows for the calculation of normalization factors that are then multiplied to each remaining mass channel distributed across the course of the run using the time stamp. This allows for correction of decline in instrument sensitivity during the course of running a sample, and also normalization across samples (see figure below). However, if the instrument used to acquire a sample differs from the Bead Passport in overall signal intensity or in sensitivity across the mass range, the Fluidigm normalization will “force” the sample data to conform to the shape and signal of the Passport (see Figure 4, especially CyTOFv1 red line).

3.1.2 MATLAB bead normalization (Finck et al.)

The MATLAB implemented normalization algorithm created by Finck et al. (2013) works by modelling the sample specific drift in count reporting. Briefly, bead singlets are manually identified by biaxial gating of each of the five bead channels against a DNA channel. The intensities of each bead channel over the course of a sample run, are smoothed by calculating local medians in a 500 bead event sliding window. Then, a baseline is calculated by taking the mean of the smoothed intensities for each bead channel. For each bead channel, for each time point, the slope of the linear regression from the channel origin to the given time point is calculated, such that the residual sum of squares between the smoothed bead intensities and the baseline is minimized. For each recorded event, the intensity is multiplied by the slope of corresponding the time point at which is was recorded. Since the MATLAB normalization compares each file to the other files rather than to an externally-determined set of values, there is typically less “forcing” or reshaping of the data than in Fluidigm normalization (see figure below).

The effects of normalization on Raw data

The effects of normalization on Raw data is shown above.

Replicate samples were stained, then pooled and split into individual

aliquots before freezing in FBS+10% DMSO at -80°C as Takahashi et al. On

each day, the sample was thawed on ice, washed 3x in MilliQ water, then

resuspended in MilliQ water containing Fluidigm EQ beads and acquired on

the indicated CyTOF instrument model. The CyTOFv2 was later upgraded to

the v2-to-Helios instrument and another aliquot was run. During data

analysis, the EQ beads were gated separately from the cells. The linear

y-axis represents the Median Di Dual signal intensity of the indicated

mass channel: the Bead data is shown in the top row, while the Cell data

is shown on the bottom row (marker on indicated cell population). While

the Beads and Cells cover a similar mass range, the signal intensity of

the Cells on a specific instrument changes depending on the

normalization method (Raw vs. Fluidigm-ver2 vs. MATLAB).

It should be noted that although the bead normalization is useful for adjusting for signal changes due primarily to the instrument performance, it does have some limitations. First, there may be some decrease in signal intensity in the sample that is not reflected in the bead signal. For example, while proper fixation does generally allow cells to withstand the osmotic force of MilliQ water, users have reported decreases in signal intensity during acquisition of large volume barcoded samples. Since the beads are not affected by lengthy suspension in water, the bead signals would not change in the same way that cell signals may. Generally, samples fixed with paraformaldehyde seem more robust than those fixed only with commercial fix/perm solutions. Additionally, samples are typically more stable as pellets. Therefore, this sample signal decrease can often be limited by breaking one large sample into aliquots that would each make 3-5 mL of diluted sample, and only resuspending the pellet of each aliquot shortly before collection. Second, since the beads are not stained with the assay reagents, they are seldom sufficient for true plate-to-plate batch correction, particularly over long periods of time when reagent lots could change.

3.1.3 Performing bead normalization

Because the MATLAB method is more commonly used in practice, it is the focus on this wiki. The MATLAB normalization method is implemented with a graphical user interface (GUI), in which the user manually gates the beads using five different bi-axial plots in which each bead metal is plotted against the iridium channel (that measures DNA). This can be installed from here.

While wet lab scientists are accustomed to working with manual gating and GUIs, such as the one in the MATLAB normalization program, this is not the preferred method for data scientists such as bioinformaticians, who commonly wish to analyze a large number of samples, making manual gating very cumbersome. CATALYST has enabled this now, but before that was possible, we implemented the MATLAB normalization algorithm in R, such that it may be called from the command-line and run completely without user interference. This process was carried out by translating the MATLAB source code (available from here) and making small alterations. The R version can be downloaded from our Github repository.

The biggest change from the original version is the fact that bead gating is carried out automatically in the R version using a Support Vector Machine (SVM), which was trained for this specific purpose, using the signals of five bead channels and the DNA channel for a total of seven data sets, for which beads had been gated manually by an experienced wet-lab scientist. The SVM was trained in R using svm from the e1071 package. The training data was seven samples, each comprising of 250,000 events, of which 4,068-8,715 were defined as beads. The data for each metal channel had been transformed using the formula ArcSinh(data)/c, in which c = 2 for CyTOF Helios data, and furthermore, each metal channel was normalized to values between 0 and 1 for the SVM training, to ensure maximal comparability between different samples. Consequently, new data to be classified using the developed SVM model should be transformed and normalized before “gating” - this is of course incorporated in the developed script. In order to evaluate the performance, a single-layer cross-validation setup was used, because a preliminary run on a smaller subset using a “out-of-the-box” SVM with a radial kernel, turned out to work satisfactory (mean accuracy of 99.997 %, with a mean precision of 99.920 %, and a mean recall of 99.971 %). The training was carried out using a leave-one-out method, in which six data sets was used for training and one data set was used for testing in each round. This should allow for an unbiased performance measure. Based on the obtained results the model was deemed acceptable, and all data sets were used to train a final model that can be used for future bead-normalization tasks - the developed R script requires this final model to run. Because the seven training samples for the SVM were all from the CyTOF Helios platform, and they all used EQ beads with the five bead isotopes, 140Ce, 151Eu, 153Eu, 165Ho, and 175Lu, the developed method in R only works for data obtained using the EQ beads on a Helios instrument. However, it could potentially easily be extended to handle other data types - it merely requires some training data to develop the proper SVM for gating the given data set type.

3.2 Compensation

Compensation is a method for correcting signal overlap, or “spillover”, between the different channels used in cytomtetry experiments. While being an established method in flow cytometry, a recent study (Chevrier et al., 2018) highlight that mass cytometry experiments are also affected by signal spillover. This leads to noisy expression matrices, and as a consequence, compensation must be applied.

To perform compensation, you need to directly quantify the overlap between isotopes using beads (or cells) stained with a single isotope. Using these single-stained beads it is possible to estimate a spillover matrix from the populations identified as belonging to each isotope, and then one can apply the inverse of the matrix to compensate the data for CyTOF runs through multiplication.

The CATALYST tool offers an implementation of a compensation method, which views spillover as linear (traditional approach) and uses non-negative linear least squares to avoid yielding negative expression values. Additionally, their vignette is quite extensive and helpful.

But how can you tell if your data needs compensation? In reality this may be hard, but one approach could be to use a biaxial plot to look for unexpected correlations between channels in your dataset. E.g. you would usually not expect a correlation between a barcoding channel and a surface marker, but you may expect a correlation between two maturity markers or two activation markers. So if you see something like the leftmost plot below (which shows a barcoding vs. a marker channel), you have a strong indication that something is wrong. Fortunately, it can be corrected using CATALYST to yield the rightmost plot. For this dataset (which is one of our own), none of the single-stained beads were actually stained with 110Pd, and consequently any signal detected in this channel must be spillover.

Compensation example. Left: Uncompensated data with a large spillover from the Cd114 channel to the Pd110 channel. Right: Compensated data.

It is actually important to note here that while what the CyTOF user will observe is a spillover from 114Cd to 110Pd, this does not really reflect what is happening inside the machine. The problem here is that 114Cd is not “clean”, and naturally occuring Cd will also contain some other isotopes including 110Cd (see this Cytoforum discussion). The CyTOF machine is unable to distinguish different isotopes with the same mass and the signal from 110Cd is consequently read as 110Pd.

3.3 Debarcoding

A GUI implementation of the single cell debarcoder may be installed from here or here, but the CATALYST implementation may also be used without the GUI. However, it is still advisable to adjust the barcode separation cut-offs manually, and for this purpose it is necessary to plot the data. The original debarcoding paper was published by Zunder et al. (2013), and the basic principle is that you use combinations of palladium isotopes to label individual samples, which you can then pool and run together on the CyTOF machine to avoid technical variation. To separate the different samples, one must identify events for which the difference in the signal between the present and absent palladium channels is clear.

3.4 Data transformations

3.4.1 Dealing with “randomized” values

When an FCS file is exported by the Helios, non-zero values are randomized negatively and uniformly (]x-1;x]). This means that a count of, for example, 9 will be evenly distributed between 8 and 9 for each channel, for each event. This is mostly done for historical reasons, as visualizing count data from the CyTOF produce different plots than intensity data (from flow cytometers). Randomization is done (by default) during conversion of IMD to FCS by the Fluidigm software. Randomized counts can be converted back to the original value by rounding every decimal up to the nearest whole number.

# Check if counts are randomized (expression matrix should only contain integers)

is.wholenumber <- function(x, tol = .Machine$double.eps^0.5) abs(x - round(x)) < tol

table(is.wholenumber(exprs[,c(pregating_channels, lineage_channels)]))##

## FALSE TRUE

## 4726283 2523717# You may revert back to original counts (only applicable to measured channels - i.e. exclude time, event length, etc.)

exprs_derandomized <- cbind(ceiling(exprs[,c(lineage_channels, pregating_channels[1:4])]), exprs[,instrument_channels])For multidimensional analysis this is definitely recommendable not to add unnecessary noise to the data, but for the purpose of the 2D visualizations we are doing for pre-gating, we will leave the randomized counts for now.

3.4.2 ArcSinh transformation

Commonly, mass cytometry ion counts will be ArcSinh transformed - this transformation will retain linearity in the low end of the count spectrum, but it resembles a log transformation in the high end. If counts are divided by a co-factor before the transformation, the range of ion counts for which linearity is retained may be adjusted. For CyTOF data, a co-factor of 5 is typically used. Transformations are done primarily for visualization purposes and co-factors are empirically determined. In some cases, adjusting co-factors slightly may improve visualizations.

Continuing from the above code snippet for loading an FCS, the data may be ArcSinh-transformed as follows:

library(MASS)

library(RColorBrewer)

library(ggplot2)

library(cowplot)

# Set co-factor

cofac <- 5

# Make arcsinh transformed expression matrix (with the exception of time and event_length, which should remain linear)

exprs_trans <- cbind.data.frame(asinh(exprs[,c(lineage_channels, pregating_channels[1:4])]/cofac), exprs[,instrument_channels])

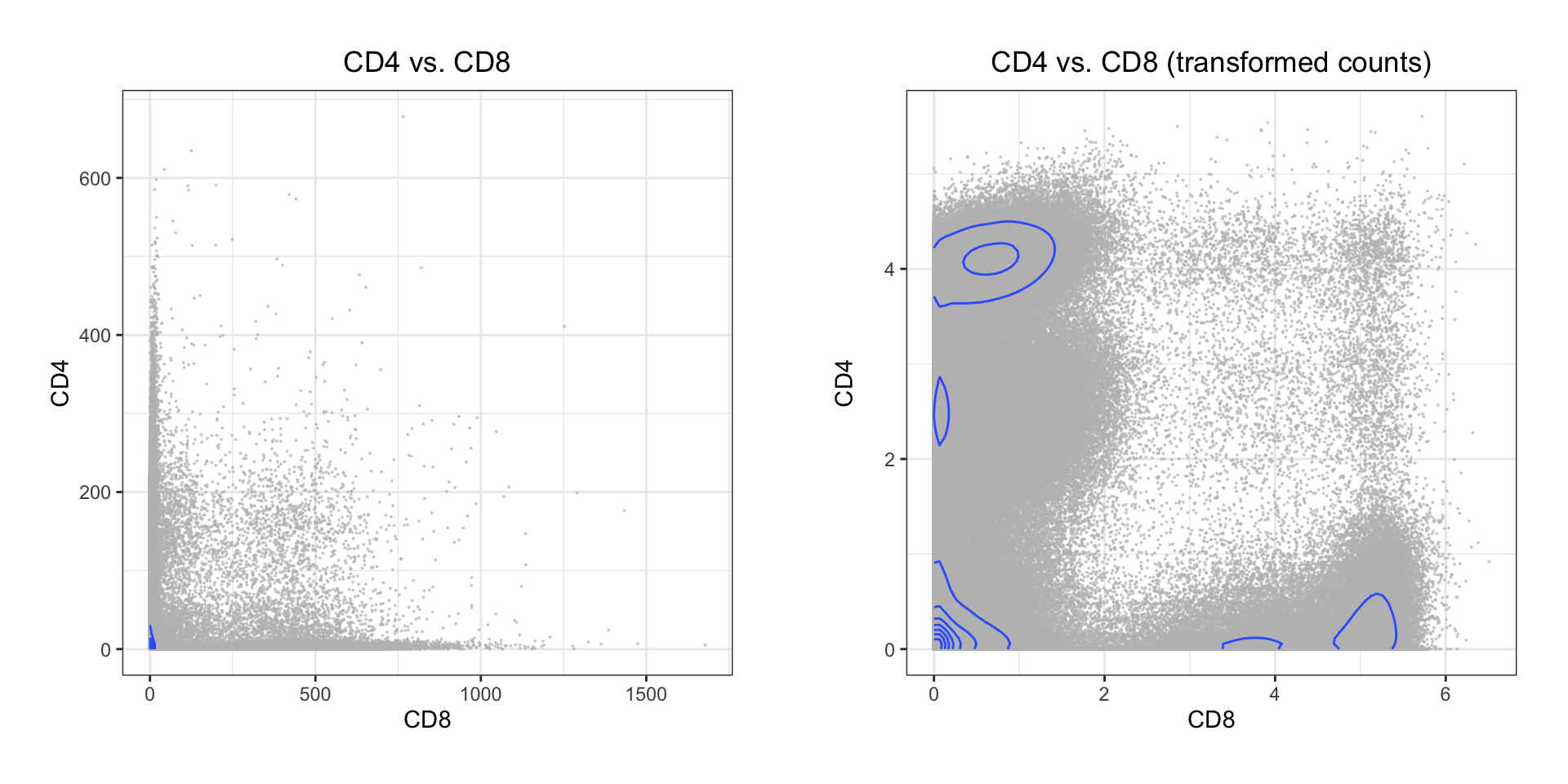

# Plot two-marker example of transformation effect

p1 <- ggplot2::ggplot(as.data.frame(exprs), aes(x = CD8, y = CD4)) +

geom_point(color = 'grey', size = 0.05, alpha = 0.6) +

geom_density2d() +

ggtitle('CD4 vs. CD8') +

theme_bw() +

theme(plot.title = element_text(hjust = 0.5))

p2 <- ggplot2::ggplot(exprs_trans, aes(x = CD8, y = CD4)) +

geom_point(color = 'grey', size = 0.05, alpha = 0.6) +

geom_density2d() +

ggtitle('CD4 vs. CD8 (transformed counts)') +

theme_bw() +

theme(plot.title = element_text(hjust = 0.5))

plot_grid(p1, p2, align = 'v', scale = 0.9)

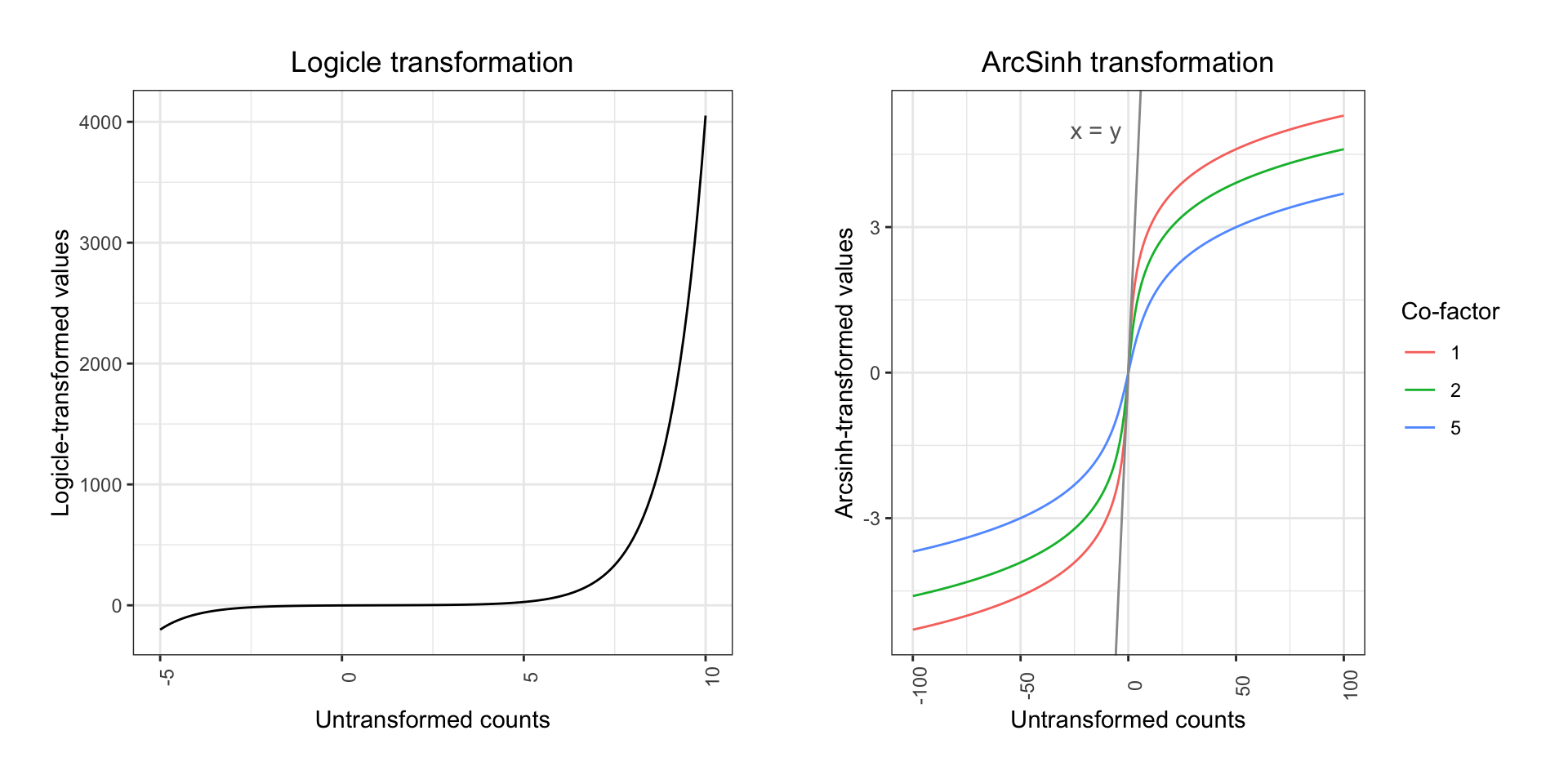

Both the classic Logicle transformation and ArcSinh works by compressing values in the upper end of the spectrum and enhancing the resolution on the lower end:

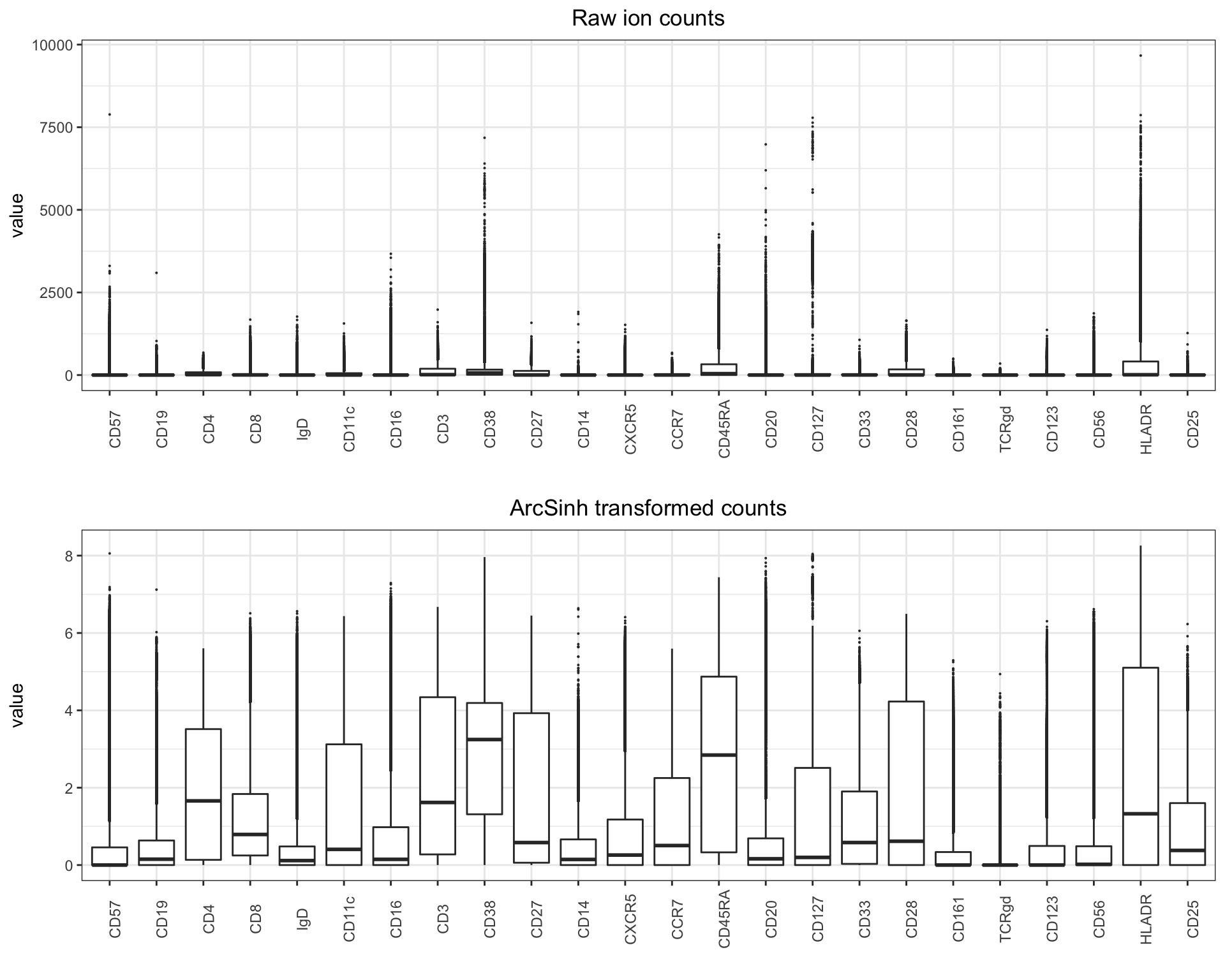

When plotting the variation for each marker before and after transformation, the difference is quite clear:

library(ggplot2)

library(reshape2)

library(cowplot)

melt_exprs <- melt(exprs[,lineage_channels]); melt_exprs_trans <- melt(exprs_trans[,lineage_channels])

p1 <- ggplot2::ggplot(melt_exprs, aes(y = value, x = Var2)) +

geom_boxplot(outlier.size=0.05) +

ggtitle('Raw ion counts') +

theme_bw() + xlab("") +

theme(plot.title = element_text(hjust = 0.5)) +

theme(axis.text.x = element_text(angle = 90, hjust = 0.5))

p2 <- ggplot2::ggplot(melt_exprs_trans, aes(y = value, x = variable)) +

geom_boxplot(outlier.size=0.05) +

ggtitle('ArcSinh transformed counts') +

theme_bw() + xlab("") +

theme(plot.title = element_text(hjust = 0.5)) +

theme(axis.text.x = element_text(angle = 90, hjust = 0.5))

plot_grid(p1, p2, align = 'v', ncol = 1)

3.5 Pre-gating

Pre-gating has the purpose to filter the data to consist only of live, intact, single cells. Traditionally, this is carried out in four steps, carried out for each sample. There are number of ways one can semi or fully automate this process (using unsupervised or supervised machine learning for instance), but for clarity we will perform “old school” pre-gating below - sped up by pre-gating all samples in one go (insofar as samples are comparable to a degree that enables this). In the following sections, we will work on all the samples as a single object, so first we reload the data into a data.frame.

library(flowCore)

# Read in your normalized files and concatenate in a data frame (in this example we subset each matrix to 10,000 random events)

# Remember to set working directory to the normalized samples

fcs_files <- list.files(path = ".", pattern='.fcs$', full=TRUE, ignore.case = TRUE)

exprs_set <- data.frame()

sample <- c()

for (f in fcs_files) {

fcs <- read.FCS(filename=f, transformation=FALSE, truncate_max_range = FALSE)

exprs <- fcs@exprs

markers <- gsub(pattern = ".*_", replacement = "", x = as.vector(fcs@parameters@data$desc))

colnames(exprs)[which(!is.na(markers))] <- markers[which(!is.na(markers))]

set.seed(134)

exprs_set <- rbind(exprs_set, exprs[sample(nrow(exprs), 10000),])

sample <- append(sample, rep(basename(f), 10000))

}

exprs_set$sample <- sample

instrument_channels <- append(instrument_channels, "sample")

# Make arcsinh transformed expression matrix (excluding channels that should remain linear - e.g. time, event length, etc.)

cofac <- 5 # For CyTOF data, the co-factor is set to 5

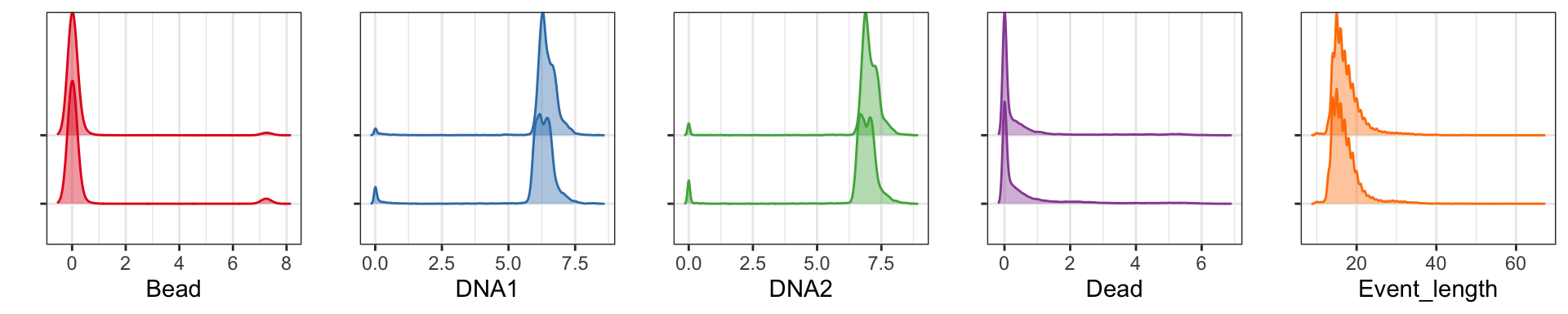

exprs_set_trans <- cbind(asinh(exprs_set[,c(pregating_channels[1:4], lineage_channels)]/cofac), exprs_set[,instrument_channels])Insofar as all samples are run on the same instrument, with the same panel, by the same operator, and bead normalized together, it should be possible to avoid pre-gating all your samples one at the time, and rather doing it in one go. You may check that the distributions of bead, DNA, and live/dead measurements are somewhat similar:

library(RColorBrewer)

library(ggplot2)

library(ggridges)

library(cowplot)

cols <- brewer.pal(5,'Set1')

p <- list()

for (c in 1:length(pregating_channels)) {

p[[c]] <- ggplot2::ggplot(as.data.frame(exprs_set_trans), aes_string(x = pregating_channels[c], y = "sample")) +

geom_density_ridges(color = cols[c], fill = alpha(cols[c], 0.4)) +

theme_bw() + theme(axis.text.y=element_blank()) + ylab("")

}

plot_grid(plotlist = p, ncol = 5)

Here we only have two samples. For the whole healthy PBMC dataset

with 21 samples, the plot looks like this:

As can be seen all five pre-gating channels are largely comparable in all samples, and pre-gating could be done across all samples. However, it is still common to do this on a per-sample basis.

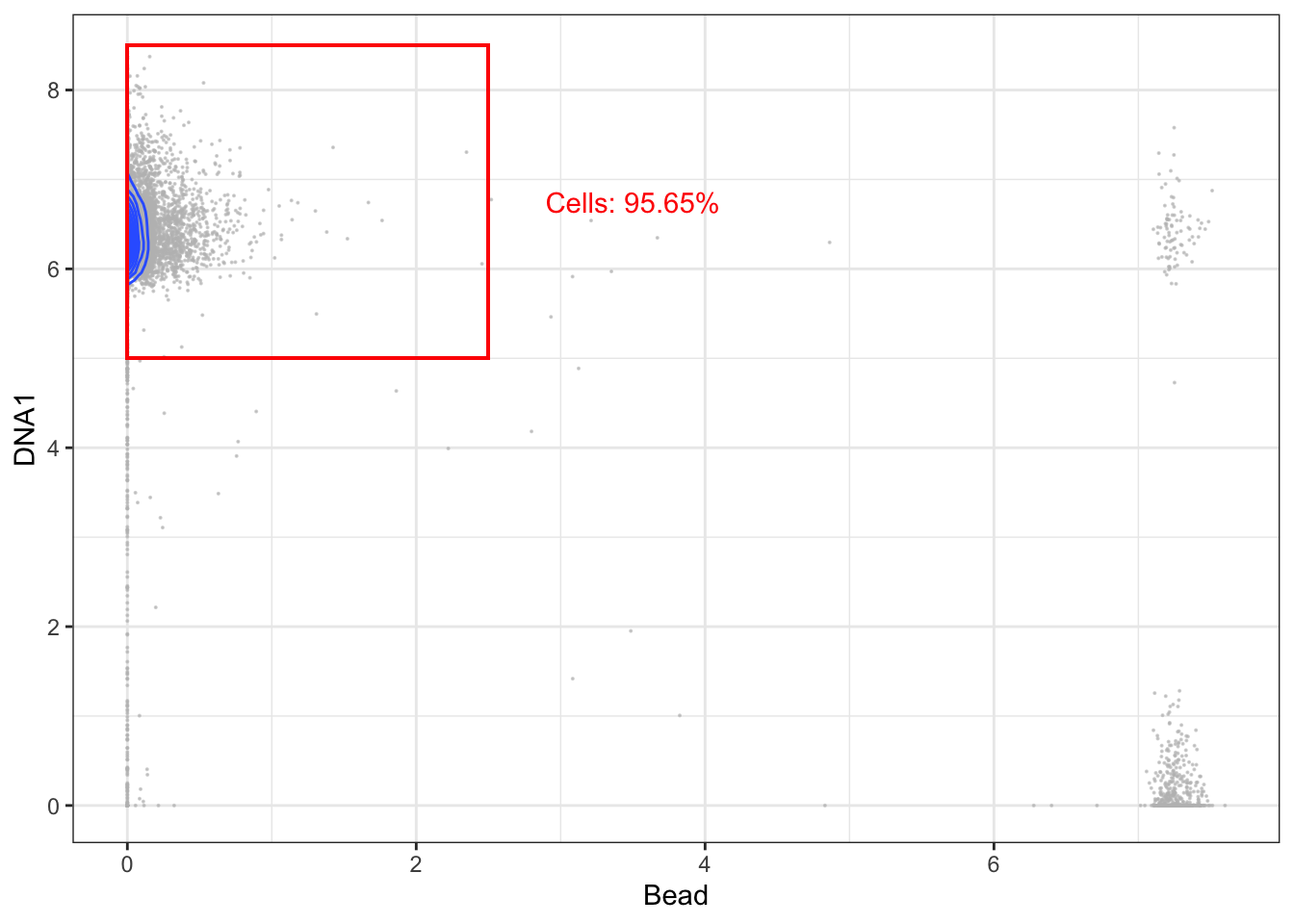

3.5.1 Gating for cells (beads vs. DNA)

The first step is to gate out beads, cell/bead dobulets, and debris. This is done by generating a biaxial plot with a DNA channel (e.g. 191Ir) on one axis and a bead-only isotope (140Ce) on the other. A single bead should be DNA-negative and 140Ce-positive, and cells should be DNA-positive and 140Ce-negative. Double negatives are considered to be debris and double positives are commonly doublets. Below is a code snippet for conducting this step after initializing as described above.

# Load necessary packages

library(ggplot2)

library(MASS)

# Generating a plot for gating visualization

p <- ggplot2::ggplot(exprs_set_trans, aes(x = Bead, y = DNA1)) +

geom_point(color = 'grey', size = 0.05, alpha = 0.6) +

geom_density2d(h = max(bandwidth.nrd(exprs_set_trans[,'Bead']), bandwidth.nrd(exprs_set_trans[,'DNA1']))) +

theme_bw()

# Set gate boundaries - the values should be manually adjusted

left <- 0; right <- 2.5; lower <- 5; upper <- 8.5

# Gating cells

cells <- exprs_set_trans[exprs_set_trans[,"Bead"] < right,]; cells <- cells[cells[,"DNA1"] > lower & cells[,"DNA1"] < upper,]

# Add gate to plot

p + geom_rect(xmin = left, xmax = right, ymin = lower, ymax = upper, fill = NA, linetype = 1, color = 'red') +

annotate('text', x = right + 1, y = mean(c(lower, upper)), label = paste("Cells: ", round((nrow(cells)/nrow(exprs_set_trans))*100, digits=2), "%", sep=""), color = 'red')

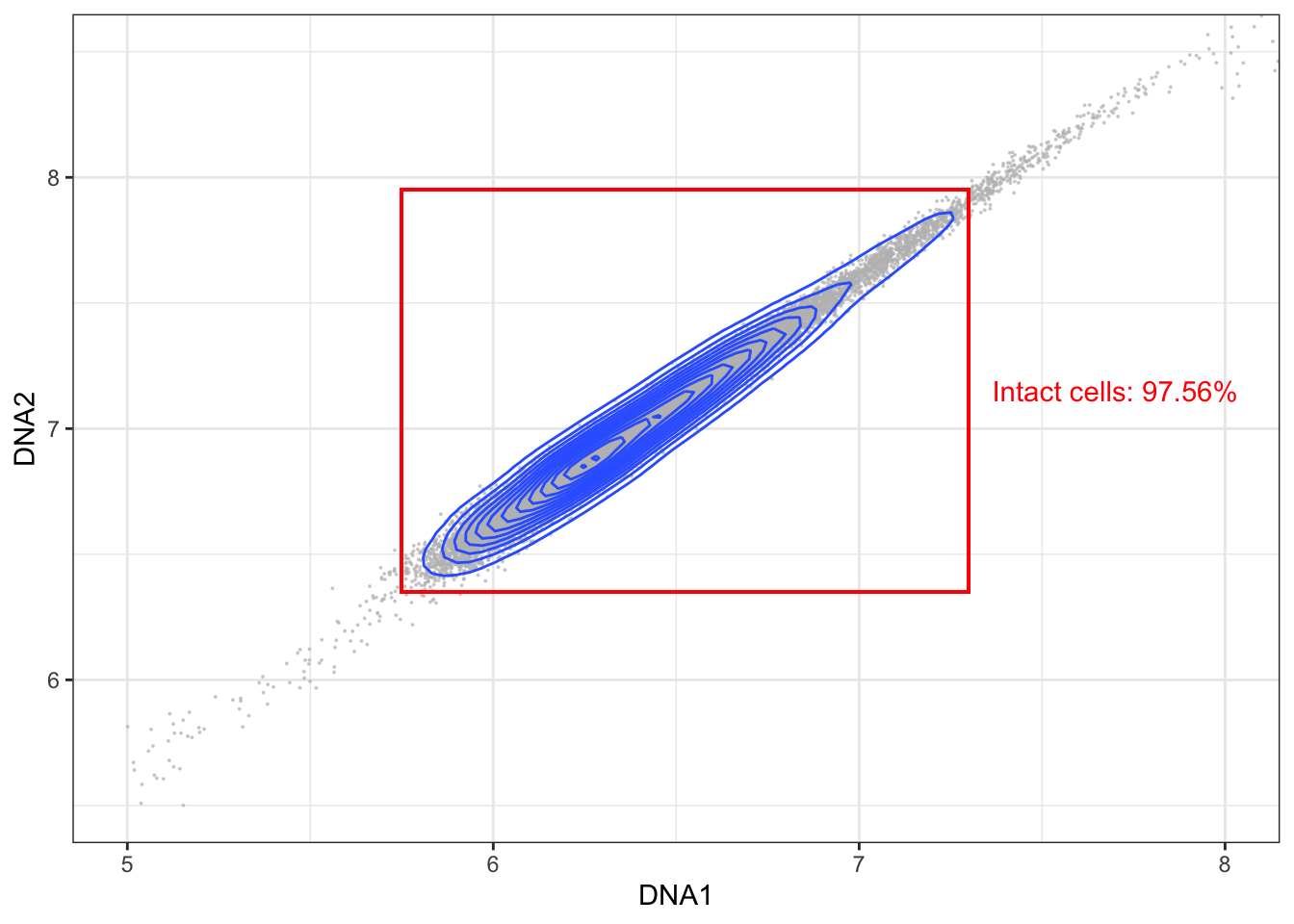

3.5.2 Gating for intact cells (DNA1 vs DNA2)

The second step is to gate for intact cells - usually by generation a bixaial plot with the two DNA channels (191Ir and 193Ir) and gating for events, which express both at a balanced level. If events express only little DNA it is likely debris, but if DNA expression is too high, they are likely cell doublets.

# Gene rating a plot for gating visualization

p <- ggplot2::ggplot(cells, aes(x = DNA1, y = DNA2)) +

geom_point(color = 'grey', size = 0.05, alpha = 0.6) +

coord_cartesian(xlim = c(5,8), ylim = c(5.5,8.5)) +

geom_density2d() +

theme_bw()

# Set gate boundaries - the values should be manually adjusted

left <- 5.75; right <- 7.3; lower <- 6.35; upper <- 7.95

# Gating intact cells

intact <- cells[cells[,"DNA1"] > left & cells[,"DNA1"] < right,]; intact <- intact[intact[,"DNA2"] > lower & intact[,"DNA2"] < upper,]

# Add gate to plot

p + geom_rect(xmin = left, xmax = right, ymin = lower, ymax = upper, fill = NA, linetype = 1, color = 'red') +

annotate('text', x = right + 0.4, y = mean(c(lower, upper)), label = paste("Intact cells: ", round((nrow(intact)/nrow(cells))*100, digits=2), "%", sep=""), color = 'red')

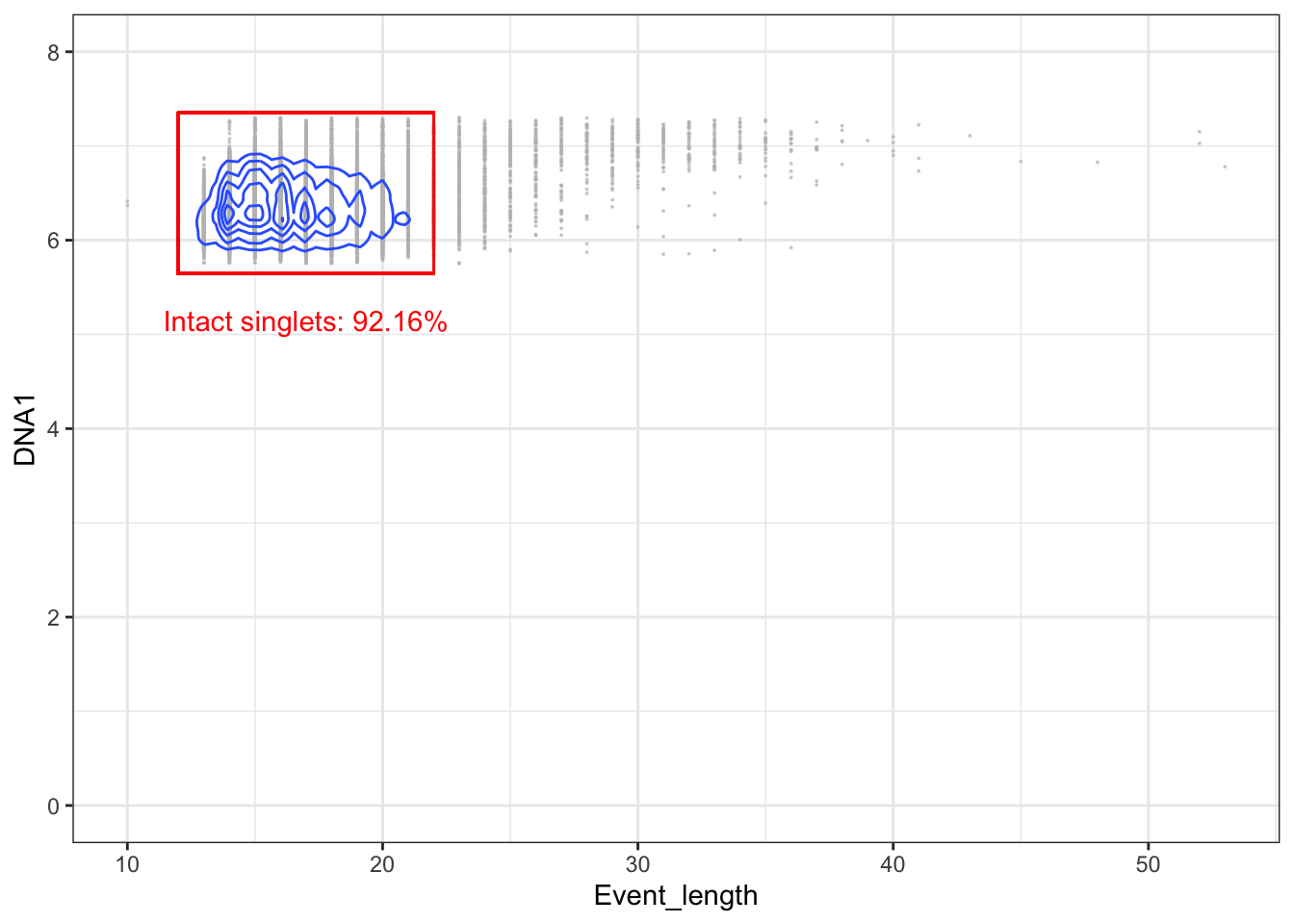

3.5.3 Gating for singlets (event length vs DNA)

The third step is to gate out doublets more specifically. This can be done by biaxially plotting event length against a DNA channel and gating for the events which have a length within the range of most events.

# Generating a plot for gating visualization

p <- ggplot2::ggplot(intact, aes(x = Event_length, y = DNA1)) +

geom_point(color = 'grey', size = 0.05, alpha = 0.6) +

coord_cartesian(ylim = c(0,8)) +

geom_density2d() +

theme_bw()

# Set gate boundaries - the values should be manually adjusted

left <- 12; lower <- 5.65; right <- 22; upper <- 7.35

# Gating intact singlets

singlets <- intact[intact[,"Event_length"] > left & intact[,"Event_length"] < right,]

# Add gate to plot

p + geom_rect(xmin = left, xmax = right, ymin = lower, ymax = upper, fill = NA, linetype = 1, color = 'red') +

annotate('text', x = mean(c(left, right)), y = lower - 0.5, label = paste("Intact singlets: ", round((nrow(singlets)/nrow(intact))*100, digits=2), "%", sep=""), color = 'red')

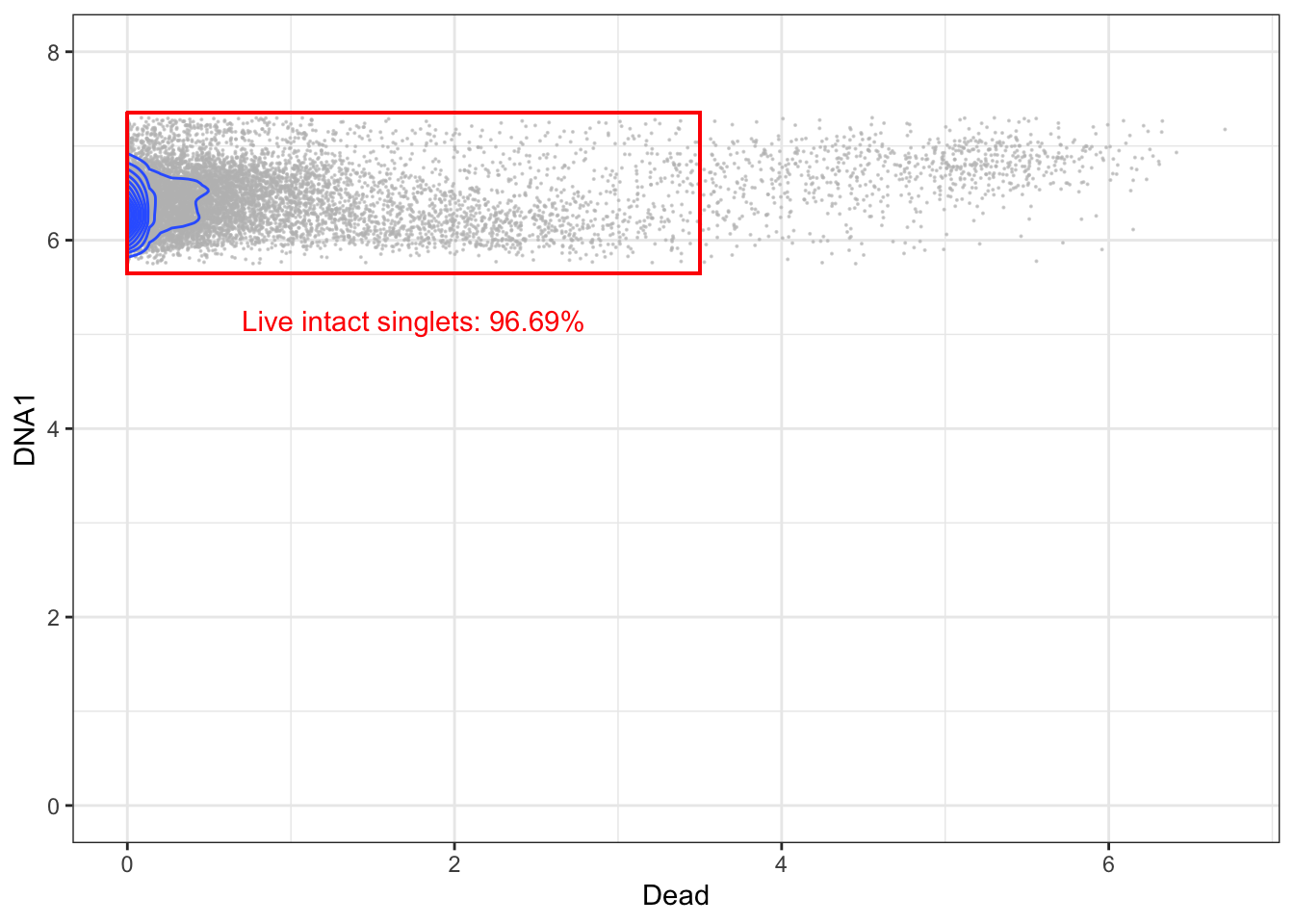

3.5.4 Gating for live cells (live/dead stain vs DNA)

The final step is to remove dead cells. This is typically carried out by using a viability stain. This staining takes place before ligation of antibody-probes. Because dead cells have disrupted membranes, the stain can enter them and form bonds with intracellular molecules. The gating itself is carried out by biaxially plotting the viability stain and a DNA channel and gating out cells with a high amount of staining.

# Generating a plot for gating visualization

p <- ggplot2::ggplot(intact, aes(x = Dead, y = DNA1)) +

geom_point(color = 'grey', size = 0.05, alpha = 0.6) +

coord_cartesian(ylim = c(0,8)) +

geom_density2d() +

theme_bw()

# Set gate boundaries - the values should be manually adjusted

left <- 0; lower <- 5.65; right <- 3.5; upper <- 7.35

# Gating live intact singlets

live <- singlets[singlets[,"Dead"] < right,]

# Add gate to plot

p + geom_rect(xmin = left, xmax = right, ymin = lower, ymax = upper, fill = NA, linetype = 1, color = 'red') +

annotate('text', x = mean(c(left, right)), y = lower - 0.5, label = paste("Live intact singlets: ", round((nrow(live)/nrow(singlets))*100, digits=2), "%", sep=""), color = 'red')

Finally, you can print the recovery percentage for each sample and save the live intact singlets to a new FCS file if you need to.

for (f in basename(fcs_files)) {

print(paste("Total recovery for ", f, ": ", round((nrow(live[live$sample==f,])/nrow(exprs_set_trans[exprs_set_trans$sample==f,]))*100, digits=2), "%", sep=""))

}## [1] "Total recovery for pbmc_healthy_1.fcs: 83.09%"

## [1] "Total recovery for pbmc_healthy_2.fcs: 83.22%"# # Save FCS containing live intact singlets

# ff <- flowFrame(as.matrix(live[,1:(ncol(live)-1)]), fcs@parameters, fcs@description)

# suppressWarnings(write.FCS(ff2, filename = paste("./", file, "live_intact_singlets", sep=""), what="numeric", delimiter = "\\\\"))More automated options for pre-gating can be conceived. One option is to train a supervised model, such as a support vector machine, on a sample for which manual gating has already been carried out. However, in this case it is also necessary to normalize and batch correct the data for alignment of the distributions of the pre-gating channels.

3.6 Batch correction

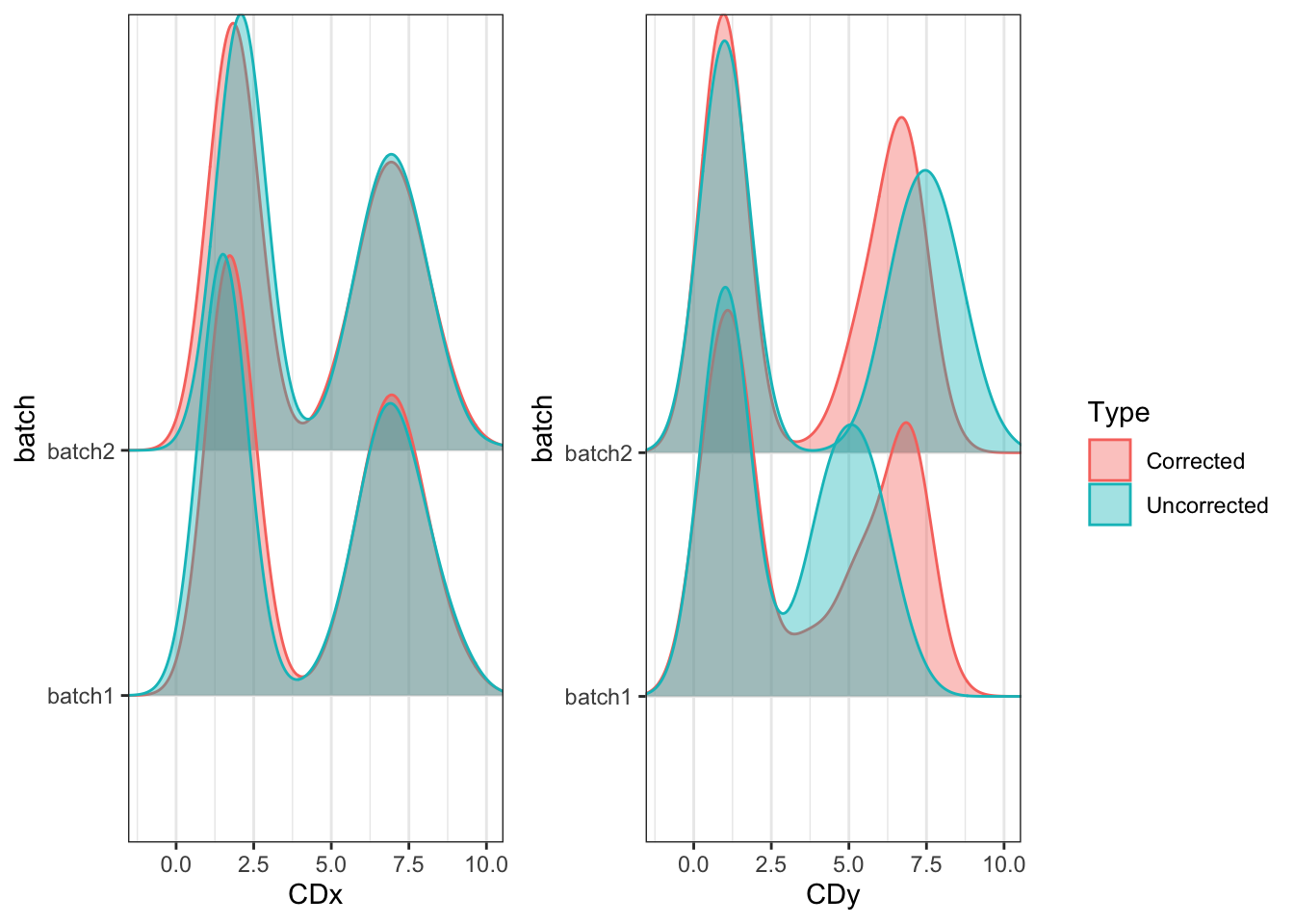

In cases where data was generated in multiple runs or one wishes to compare data from different experiments, there may be unwanted technical variation in the data. In that case, you can correct for batch effects using one of the published tools addressing this in cytometry data. One example is cyCombine, which we have developed, and will briefly demonstrate the use of below. Other, examples include CytoNorm, CytofRUV, iMUBAC, and CyTOFBatchAdjust. A benchmark of these tools is included in the cyCombine publication and much more detailed descriptions of how to use cyCombine - and the other tools - may be found in the cyCombine vignettes.

For the purpose of demonstrating, let us construct a mini example here:

library(cyCombine)

# Create two toy batches of bimodal densities for two toy markers

batch1 <- cbind.data.frame('CDx' = c(rnorm(500,1.5,0.5), rnorm(500,7,1)), 'CDy' = c(rnorm(500,1,0.5), rnorm(500,5,1)), 'batch' = 'batch1')

batch2 <- cbind.data.frame('CDx' = c(rnorm(500,2.1,0.5), rnorm(500,7,1)), 'CDy' = c(rnorm(500,1,0.5), rnorm(500,7.5,1)), 'batch' = 'batch2')

uncorrected <- rbind.data.frame(batch1, batch2)

# Run the normalization

corrected <- batch_correct(uncorrected)

# Visualize the data before and after correction

plot_density(uncorrected, corrected, ncol = 2)

The distributions for CDy are clearly unaligned without correction and we need to correct for this before we can properly analyze them together. cyCombine can help us correct the data so downstream analysis across batches becomes meaningful.

4 Data analysis

4.1 Dimensionality reduction

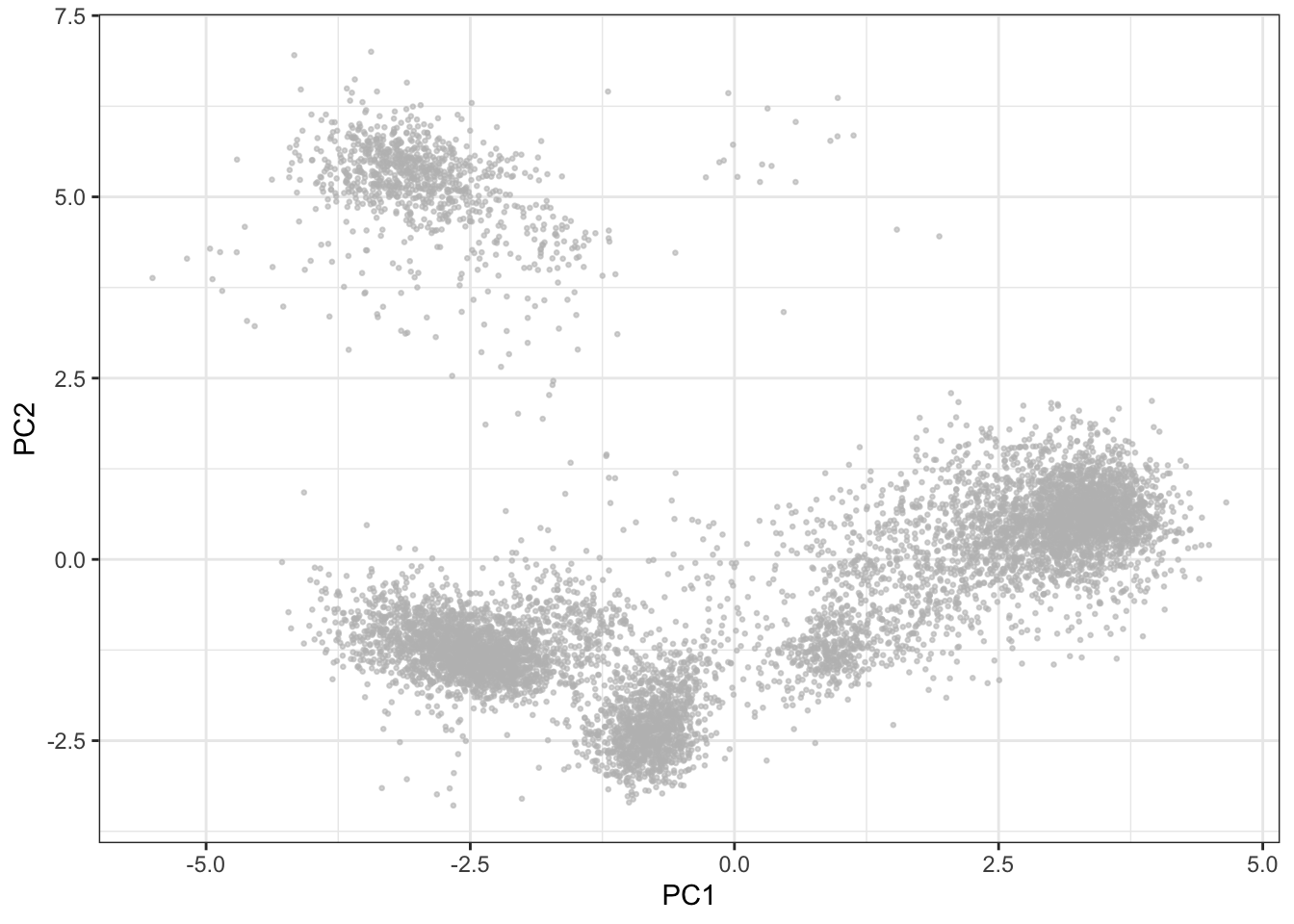

4.1.1 Principal component analysis

The most commonly used algorithm for dimensionality reduction in the biological sciences is principal component analysis (PCA). For mass cytometry data, a PCA will often capture 40-50% of the variance on the first 2-3 PCs, but since the distance between events is linear, data existing on a nonlinear manifold will be misrepresented (Chester & Maecker 2015). Whether this is an issue with CyTOF data or not depends heavily on the source of data. For example, as cells in the hematopoietic system develop in a hierarchical structure, the transition between some cells will result in a continuum rather than distinct clusters, which may not be entirely captured by PCA.

fcs_files <- basename(fcs_files)

pca <- prcomp(live[live$sample==fcs_files[1],lineage_channels], scale. = TRUE)

ggplot2::ggplot(as.data.frame(pca$x), aes(x = PC1, y = PC2)) +

geom_point(color = "grey", alpha = 0.6, size = 0.5) +

theme_bw()

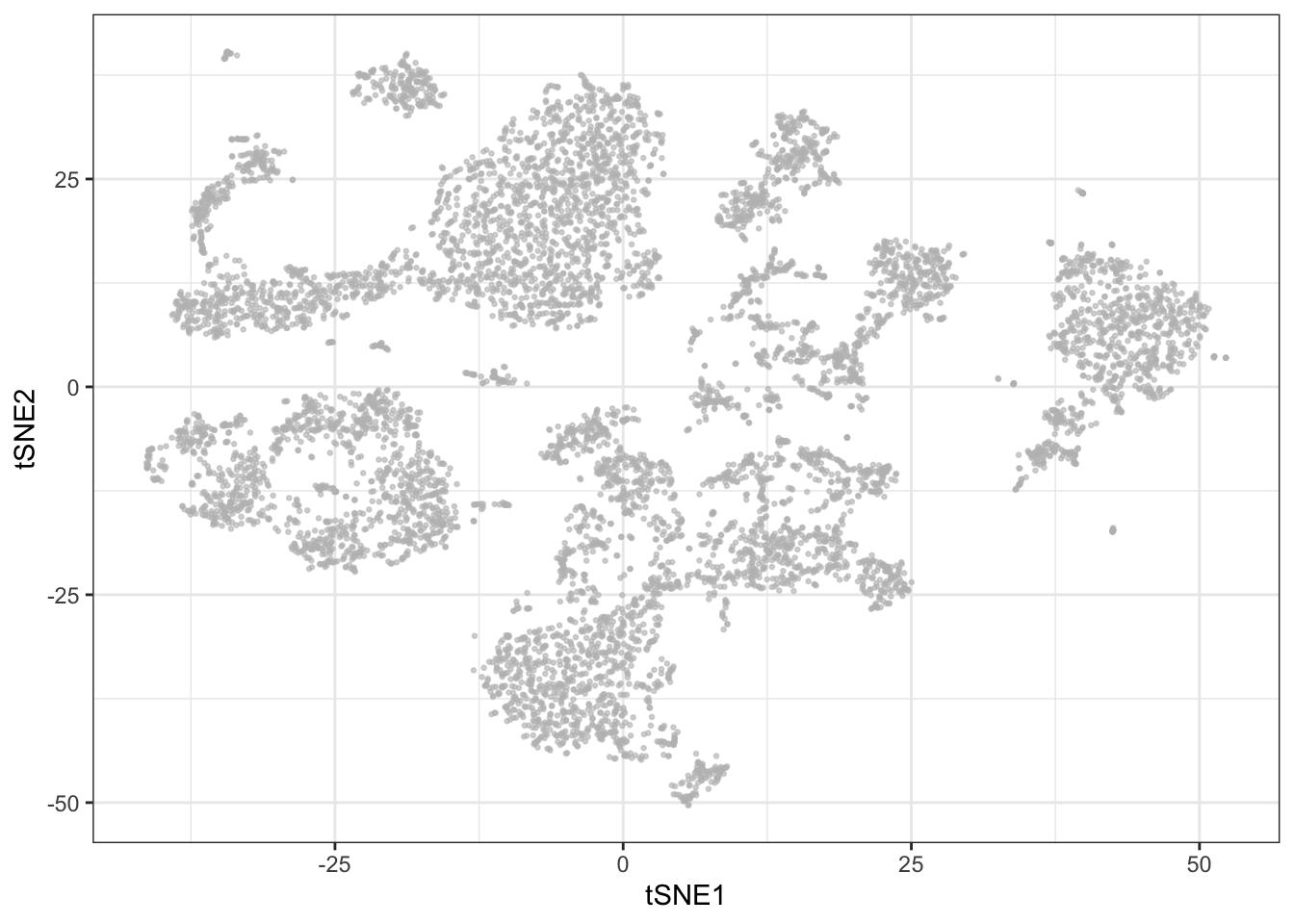

4.1.2 t-SNE

The t-Distributed Stochastic Neighbor Embedding (t-SNE) dimensionality reduction algorithm is a popular tool for dimensionality reduction of cytometry data (van der Maaten and Hinton, 2008). The distance between data points are represented by a t-distribution, meaning that multidimensional distances are preserved to a greater degree than PCA. However, there are a number of features of the algorithm that can lead to misinterpretation by the unaware user. For example, t-SNE (intentionally) does not preserve distances nor density, and it only preserves nearest-neighbors if reasonable hyperparameters are selected. This means that the sizes of the clusters are not always indicative of the variability within the cluster, and similarly, the distance between two clusters is not always proportional to how different they really are. In this regard, the algorithm is quite sensitive to the user making an informed choice of the perplexity and epsilon hyperparameters to reasonably represent the underlying structure of the data (See Martin Wattenberg et al.’s excellent blog post on how to use t-SNE efficiently.). Another feature that may be considered a disadvantage to some is that t-SNE depends on a random start and is non-deterministic even on the same data unless the same seed and iteration limit is used for each run. Additionally, removing or adding even a few data points from the input set will affect the layout of the remaining events, and consequently it is not directly possible to add samples to an existing t-SNE plot. Lastly, t-SNE does not allow for identical events, which, in the case of immune profiling where one would expect at least some identical cells in each cluster, means discarding or scrambling data. Dimensionality reduction definitely has a place in mass cytometry analysis pipelines, but for these reasons, it should be limited to exploratory and/or post-analysis visualizations. t-SNE is implemented in a number of languages including R, Python, C++, etc., and as a plug-in to FlowJo X. The MATLAB implementation of t-SNE often used for mass cytometry data in GUI pipelines is referred to as viSNE (Amir et al., 2013).

Before running t-SNE, it is necessary to remove identical events (if any) from the sample (duplicate rows).

table(duplicated(live[live$sample==fcs_files[1],lineage_channels]))##

## FALSE

## 8309# No duplicates here, but if so, they can be removed:

live <- live[!duplicated(live[live$sample==fcs_files[1],lineage_channels]),]library(Rtsne)

set.seed(42)

tsne <- Rtsne(live[live$sample==fcs_files[1],lineage_channels])

ggplot2::ggplot(as.data.frame(tsne$Y), aes(x = V1, y = V2)) +

geom_point(color = "grey", alpha = 0.6, size = 0.5) +

xlab('tSNE1') + ylab('tSNE2') +

theme_bw()

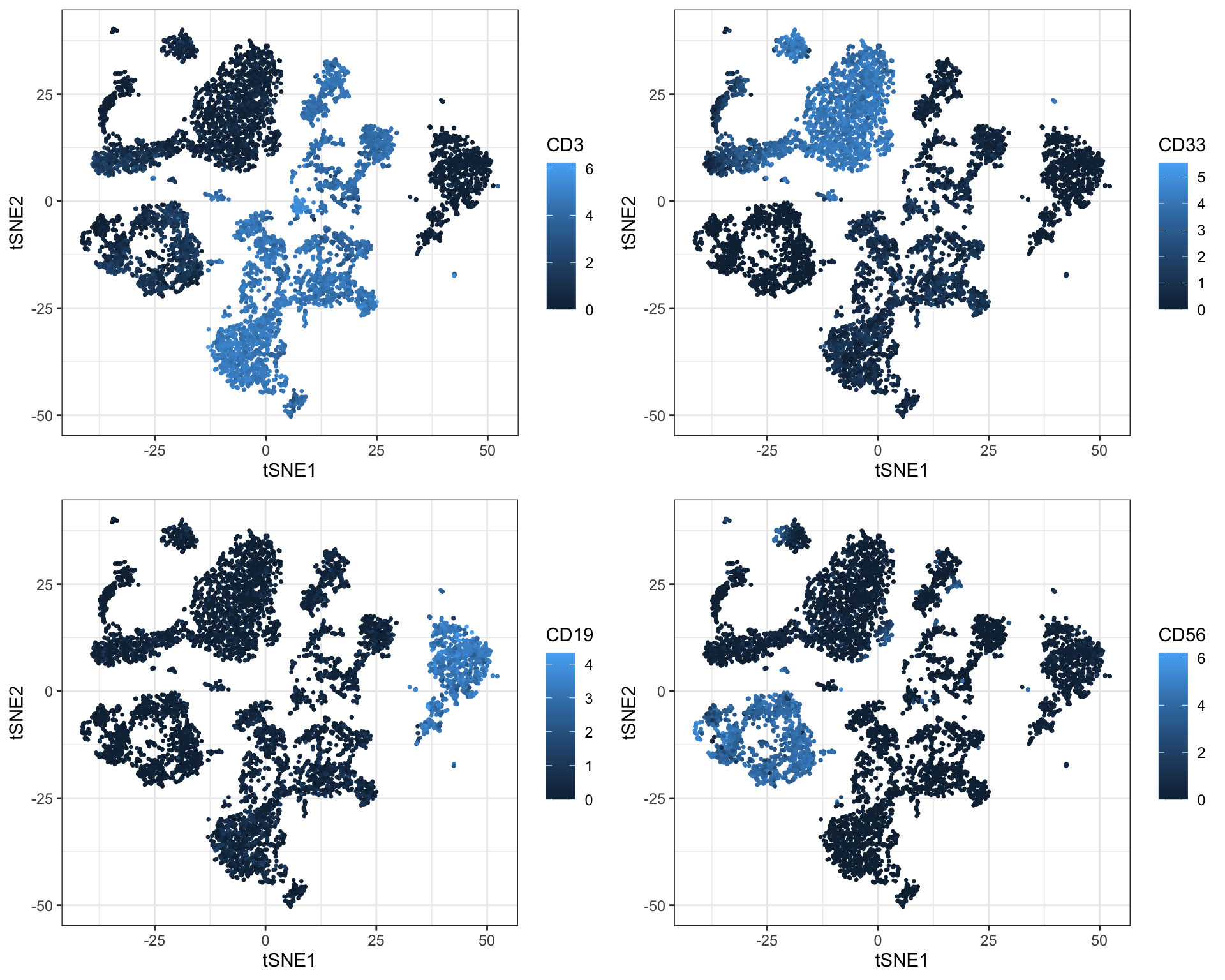

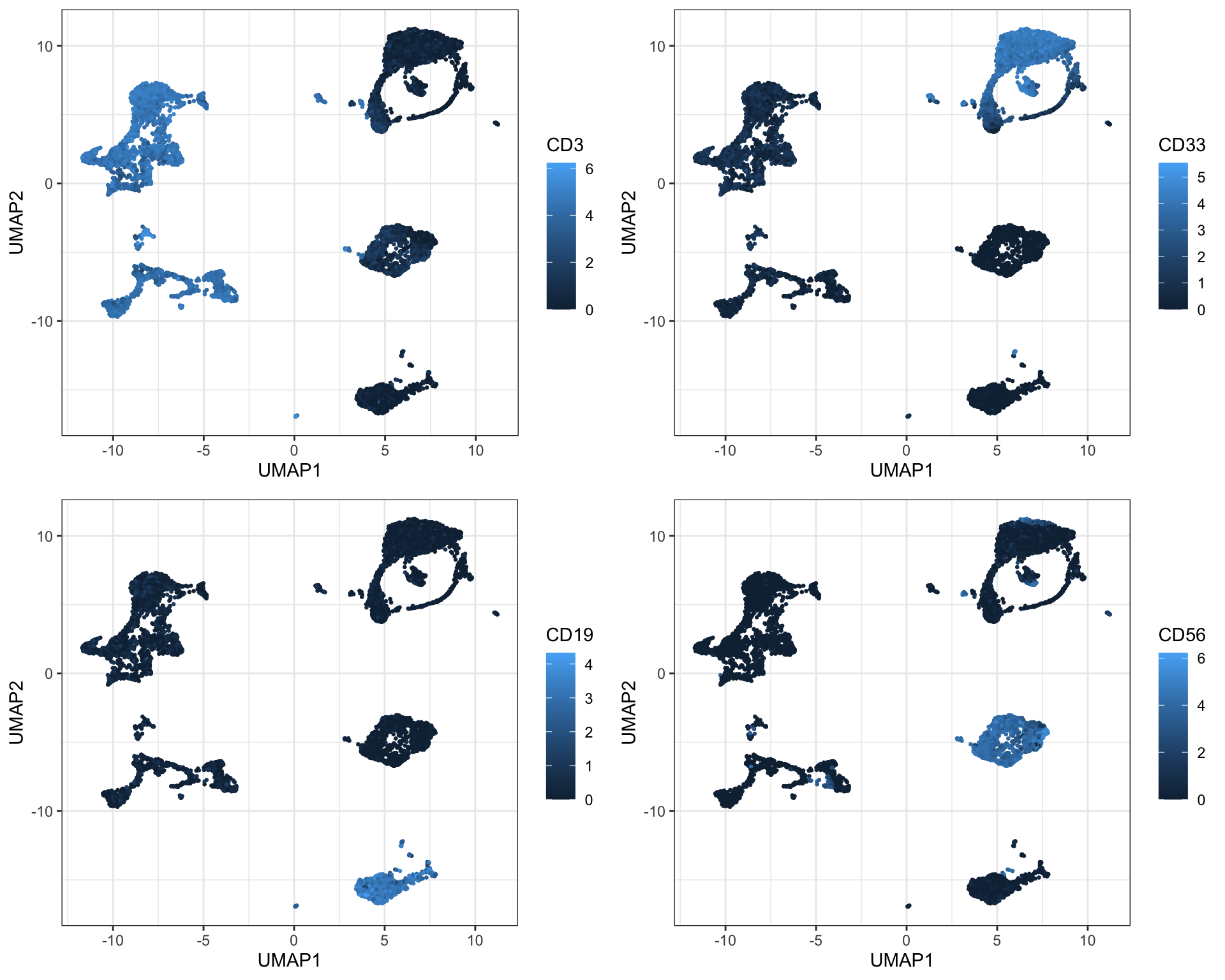

Even before clustering, it is possible to get some sense of the cell composition in a sample by selectively coloring cells by marker expression on the t-SNE plot:

library(reshape2)

sub <- cbind.data.frame(live[live$sample==fcs_files[1],], tsne$Y); colnames(sub)[(ncol(sub)-1):ncol(sub)] <- c('tSNE1', 'tSNE2')

p <- list()

for (c in c("CD3", "CD33", "CD19", "CD56")) {

p[[c]] <- ggplot2::ggplot(sub, aes(x = tSNE1, y = tSNE2)) +

geom_point(aes_string(color = c), size = 0.5) +

theme_bw()

}

plot_grid(plotlist = p, ncol = 2)

4.1.3 UMAP

Uniform Manifold Approximation and Projection (UMAP) is a newer addition to the family of CyTOF-applicable dimensionality reduction methods (Becht et al., 2018). It works in a fashion that is more similar to t-SNE than PCA, which means that it also views each cell as a member of a neighborhood of similar cells. However, it has several advantages over t-SNE. The first one is speed. For a small dataset there may not be a significant difference, bu you will surely notice it for a million cells. Furthermore, UMAP preserves global distances in the data a lot better than t-SNE. Recall that distances have no meaning in a t-SNE plot - you can not say anything about how different clusters are with t-SNE and distances between clusters tend to be fairly constant. In UMAP plots, distances once again have meaning, although they are not linear and directly interpretable as for PCA.

UMAP, like t-SNE also requires setting a seed for each run to make it reproducible. UMAP is implemented in several languages including R and Python.

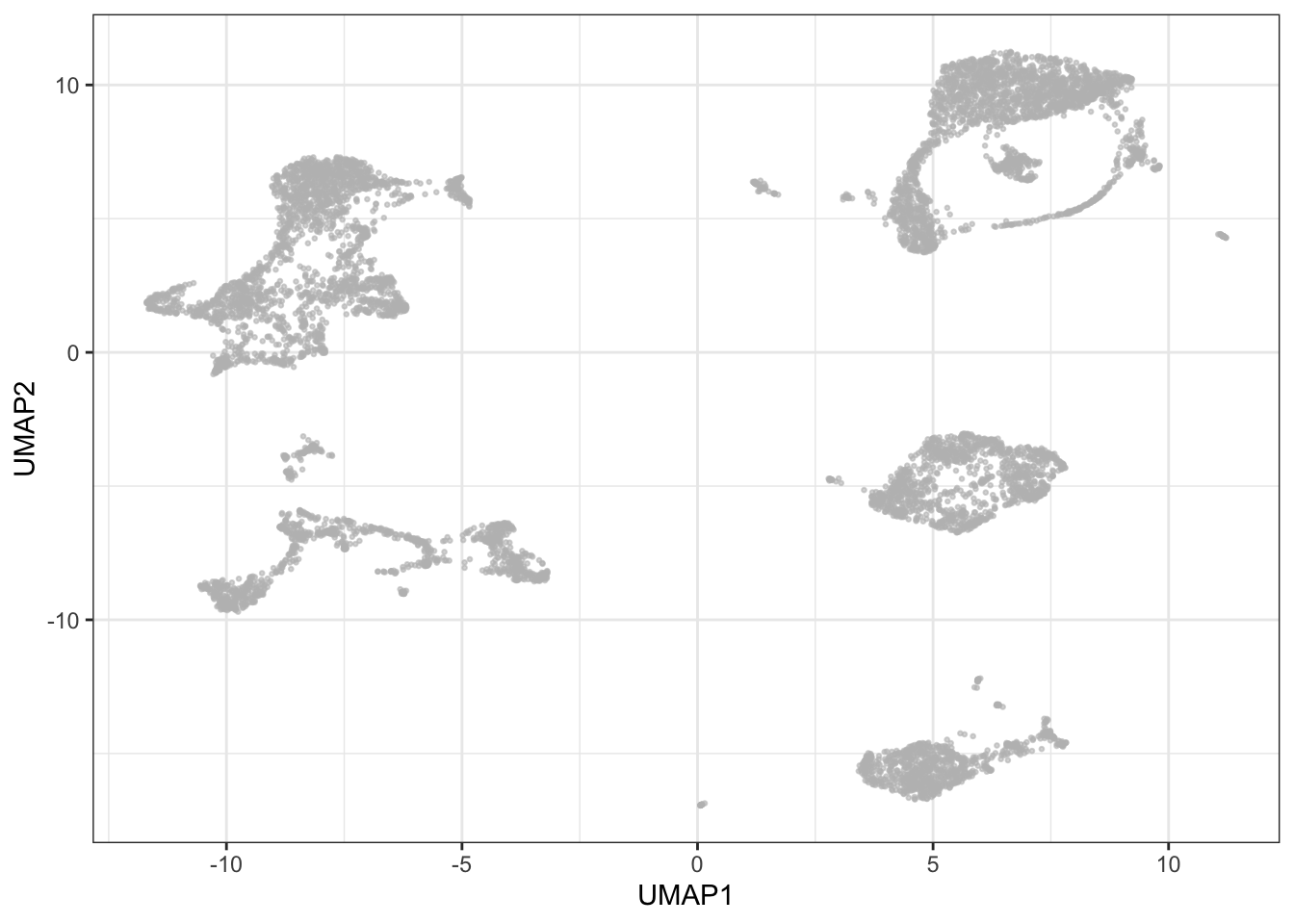

library(uwot)## Warning: pakke 'Matrix' blev bygget under R version 4.1.2set.seed(758)

umap <- umap(live[live$sample==fcs_files[1],lineage_channels], n_neighbors = 15, min_dist = 0.2, metric = 'euclidean')

ggplot2::ggplot(as.data.frame(umap), aes(x = V1, y = V2)) +

geom_point(color = "grey", alpha = 0.6, size = 0.5) +

xlab('UMAP1') + ylab('UMAP2') +

theme_bw()

Again, it is possible to get a pretty good insight into cell composition in a sample by selectively coloring cells by marker expression on the UMAP plot - you will also notice how this is similar to the patterns seen with t-SNE:

sub <- cbind.data.frame(live[live$sample==fcs_files[1],], umap); colnames(sub)[(ncol(sub)-1):ncol(sub)] <- c('UMAP1', 'UMAP2')

p <- list()

for (c in c("CD3", "CD33", "CD19", "CD56")) {

p[[c]] <- ggplot(sub, aes(x = UMAP1, y = UMAP2)) +

geom_point(aes_string(color = c), size = 0.5) +

theme_bw()

}

plot_grid(plotlist = p, ncol = 2)

4.2 Cell subset detection (clustering)

Deconvoluting a cell mixture may be a goal in itself, and certainly a necessity for meaningful comparison of samples or cell populations whether transversal or longitudinal. The traditional approach relies on manual gating on biaxial plots, but as the number of features are increased, the manual strategy becomes increasingly laborious. A number of methods are therefore seeking to automate or semi-automate the process - not only in the interest of speed, but unsupervised methods may also reveal cell populations overlooked when following standard gating strategies.

Automated strategies are usually based on some form of clustering. Since the hematopoietic continuum is a cellular hierarchy, the obvious first choice is simply hierarchical clustering. However, this method is by some considered suboptimal, usually ascribed to the need to manually select the number of clusters based on a distance cutoff in the resulting dendrogram. This forces the user to guess the number of populations in the sample: selecting too many will over-separate the data into meaningless small clusters; selecting too few will agglomerate smaller, but potentially interesting clusters into larger ones. Additionally, with a computational complexity of O(n) and a typical yield of ~200.000 cells per sample, this can be a time consuming algorithm to run on CyTOF data. However, multiple tools still utilize hierarchical clustering in their algorithms. SPADE (Qiu et al. 2011), for example, overcomes the computational challenges and the risk of missing rare populations by performing density dependent down-sampling before hierarchical clustering.

Phenograph (automatically decides the optimal number of clusters) and FlowSOM (you need to provide an informed guess of the number of clusters) were recently shown to be the best performing algorithms in a comparison of the main algorithms by Weber and Robinson 2016. It is of course worth mentioning that there are many other methods available (eg. ACCENSE and clusterX). We will also briefly touch upon a few tools such as e.g. X-shift (Samusik et al. 2016), or force-directed layout-based methods, e.g. SCAFFoLD (Spitzer et al. 2015), which currently do not have R command based interfaces, as well as cover tools with integrated clustering (e.g. SPADE and citrus) in greater depth in later sections.

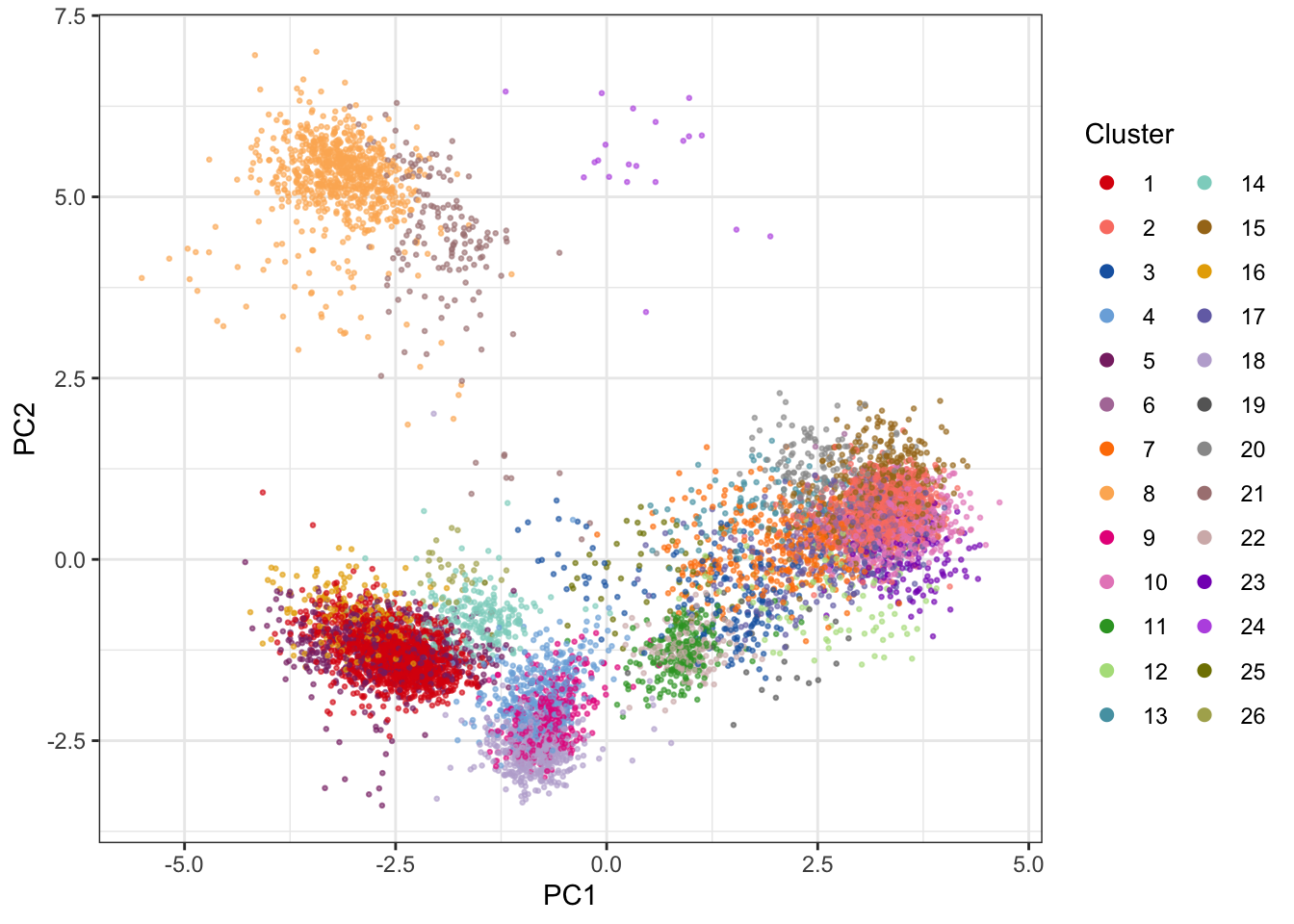

4.2.1 Phenograph

Phenograph is an algorithm which directly assigns single cells to a particular cluster while taking the all measured dimensions into account. Phenograph employs a k-nearest neighbor graph (k-NNG), in which each cell is a node that is connected to its nearest neighbors by edges, to cluster cells into phenotypically similar groups - cellular populations. Clusters consist of nodes with high inter-connectivity. Phenograph is implemented in the R package “cytofkit2”.

Phenograph also requires removal of duplicates (see t-SNE section above).

library(cytofkit2)

clusters_pg <- cytof_cluster(xdata = live[live$sample==fcs_files[1],lineage_channels], method = "Rphenograph")# Visualize results on PCA (using nice colors from CATALYST)

cols <- CATALYST:::.cluster_cols

plot_df <- cbind.data.frame(pca$x, clusters_pg); plot_df$clusters_pg <- as.factor(plot_df$clusters_pg)

ggplot2::ggplot(plot_df, aes(x = PC1, y = PC2)) +

geom_point(aes(color = clusters_pg), alpha = 0.6, size = 0.5) +

guides(colour = guide_legend(override.aes = list(alpha = 1, size = 2), ncol = 2, title = 'Cluster')) +

scale_color_manual(values = cols) +

theme_bw()

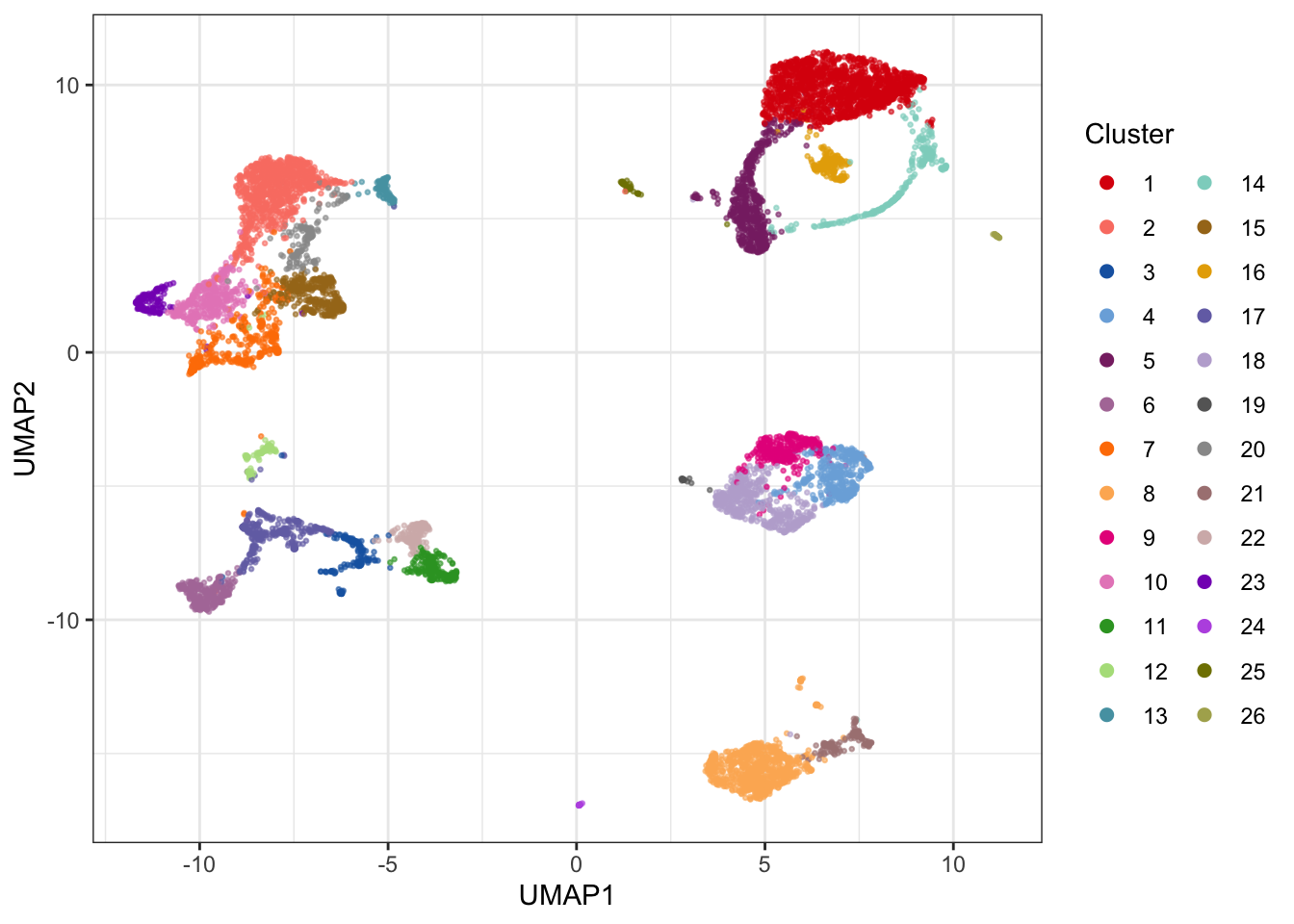

The 2D PCA visualization doesn’t provide good visual separation of clusters. Adding an extra PC adds a significant amount of visual resolution to the clustering, but there are better solutions - e.g. using UMAP.

# Visualize results on UMAP plot using nice colors from CATALYST

cols <- CATALYST:::.cluster_cols

plot_df <- cbind.data.frame(umap, clusters_pg); plot_df$clusters_pg <- as.factor(plot_df$clusters_pg); colnames(plot_df)[1:2] <- paste0('UMAP', 1:2)

ggplot2::ggplot(plot_df, aes(x = UMAP1, y = UMAP2)) +

geom_point(aes(color = clusters_pg), alpha = 0.6, size = 0.5) +

guides(colour = guide_legend(override.aes = list(alpha = 1, size = 2), ncol = 2, title = 'Cluster')) +

scale_color_manual(values = cols) +

theme_bw()

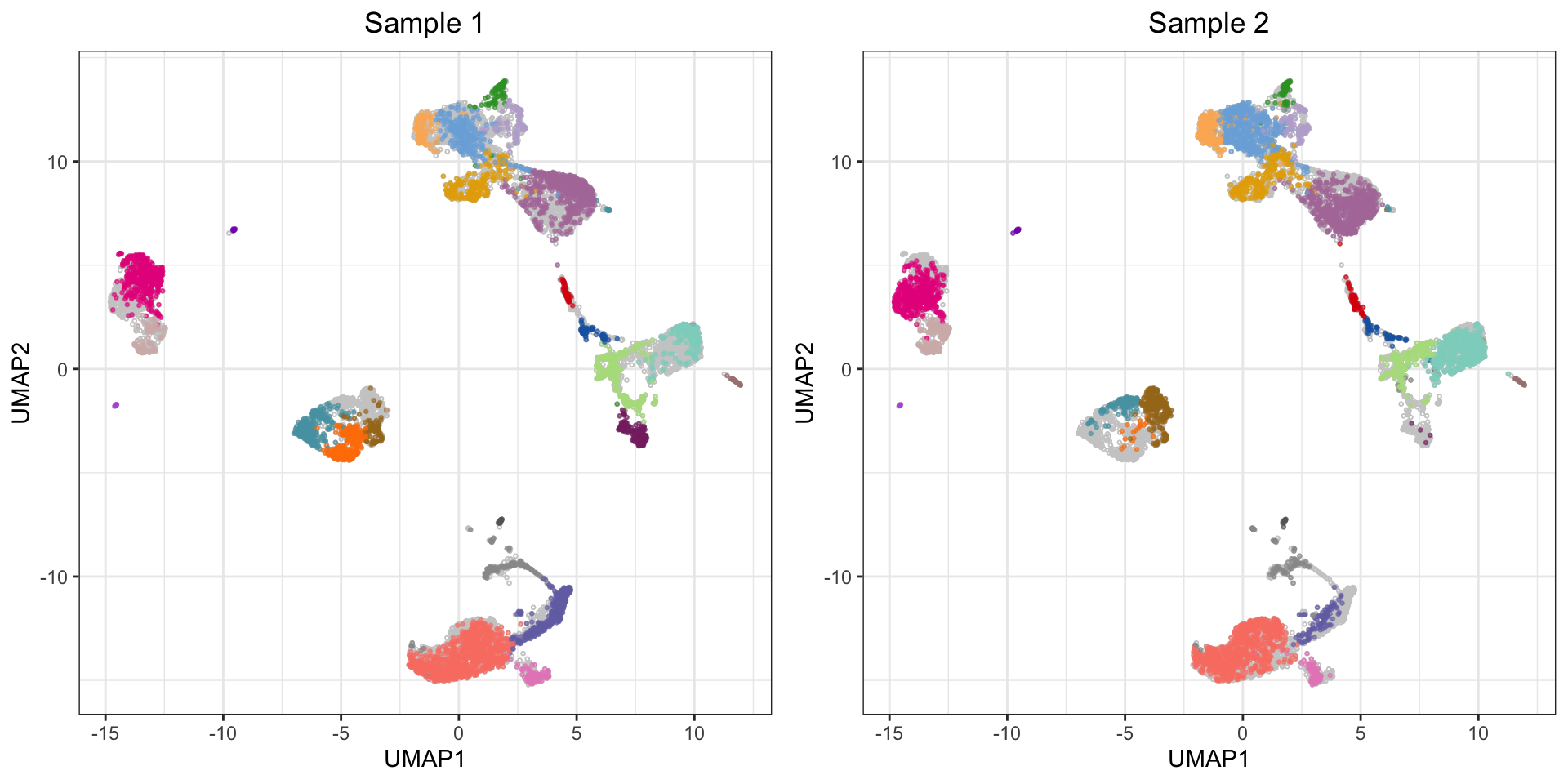

If you want to see how multiple samples compare with clustering and UMAP, one approach is to pool events from multiple samples, cluster them together, and plot them individually on in the UMAP layout which was generated based on both samples (recall that UMAP can potentially produce vastly different layouts if samples are run separately). Below is an example with two samples:

# Sample 5000 random events from each sample

sub <- NULL

for(i in 1:2) {

temp <- live[live$sample==fcs_files[i],]

set.seed(58)

sub <- rbind(sub, temp[sample(nrow(temp), 5000, replace=FALSE),])

}

# Cluster using phenograph

clusters_pg_2 <- cytof_cluster(xdata = sub[,lineage_channels], method = "Rphenograph")

# Run UMAP

set.seed(135)

umap_2 <- umap(sub[,lineage_channels], n_neighbors = 15, min_dist = 0.2, metric = 'euclidean')

# Get nice color scale from CATALYST

cols <- CATALYST:::.cluster_cols

# Plot each sample on UMAP canvas consisting of all events

plot_df <- cbind.data.frame(umap_2, clusters_pg_2, sub$sample); plot_df$clusters_pg_2 <- as.factor(plot_df$clusters_pg_2); colnames(plot_df) <- c(paste0('UMAP', 1:2), 'Cluster', 'Sample')

p <- list()

for (s in 1:2) {

p[[s]] <- ggplot2::ggplot(plot_df[plot_df$Sample==unique(plot_df$Sample)[s],], aes(x = UMAP1, y = UMAP2)) +

geom_point(data = plot_df, pch = 21, color = 'grey80', size = 0.5) +

geom_point(aes(color = Cluster), alpha = 0.6, size = 0.5, show.legend = F) +

scale_color_manual(values = cols) +

ggtitle(paste('Sample', s)) +

theme_bw() +

theme(plot.title = element_text(hjust = 0.5))

}

plot_grid(plotlist = p, ncol = 2)

4.2.2 FlowSOM clustering

FlowSOM is a method for clustering and visualization of flow and mass cytometry data. FlowSOM uses two levels of clustering to visualize how the intensity of cell type markers varies across the populations, and the amount of clusters used is quite high to ensure a visualization of even small subpopulations.

A standalone R package for FlowSOM enables clustering, SOM, and MST visualizations. The package is built to run on FCS files rather than expression matrices, so here we will use the FlowSOM clustering implementation from the cytofkit2 package.

library(cytofkit2)

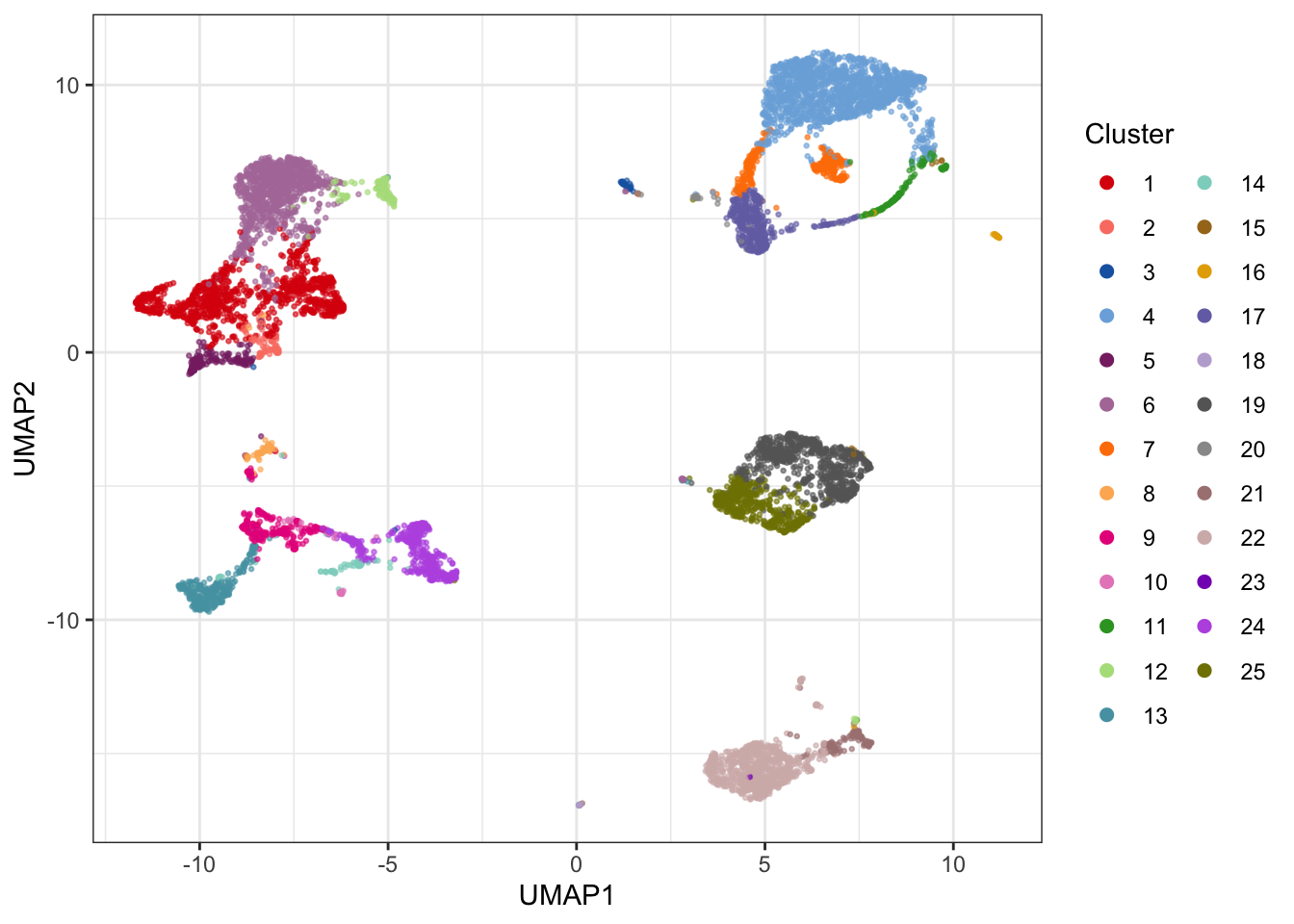

clusters_fs <- cytof_cluster(xdata = live[live$sample==fcs_files[1],lineage_channels], method = "FlowSOM", FlowSOM_k = 25, flowSeed = 462)# Visualize results on UMAP plot (colors from CATALYST)

cols <- CATALYST:::.cluster_cols

plot_df <- cbind.data.frame(umap, clusters_fs); plot_df$clusters_fs <- as.factor(plot_df$clusters_fs); colnames(plot_df)[1:2] <- paste0('UMAP', 1:2)

ggplot2::ggplot(plot_df, aes(x = UMAP1, y = UMAP2)) +

geom_point(aes(color = clusters_fs), alpha = 0.6, size = 0.5) +

guides(colour = guide_legend(override.aes = list(alpha = 1, size = 2), ncol = 2, title = 'Cluster')) +

scale_color_manual(values = cols) +

theme_bw()

4.2.3 VorteX

VorteX is a clustering tool, which is implemented in Java and available as a graphical tool. VorteX includes different clustering algorithms incl. X-shift (non-parametric, density-based), hierarchical clustering, Mean-shift and K-medoids. In VorteX it is also easy to visualize the results or clusterings using different plot types.

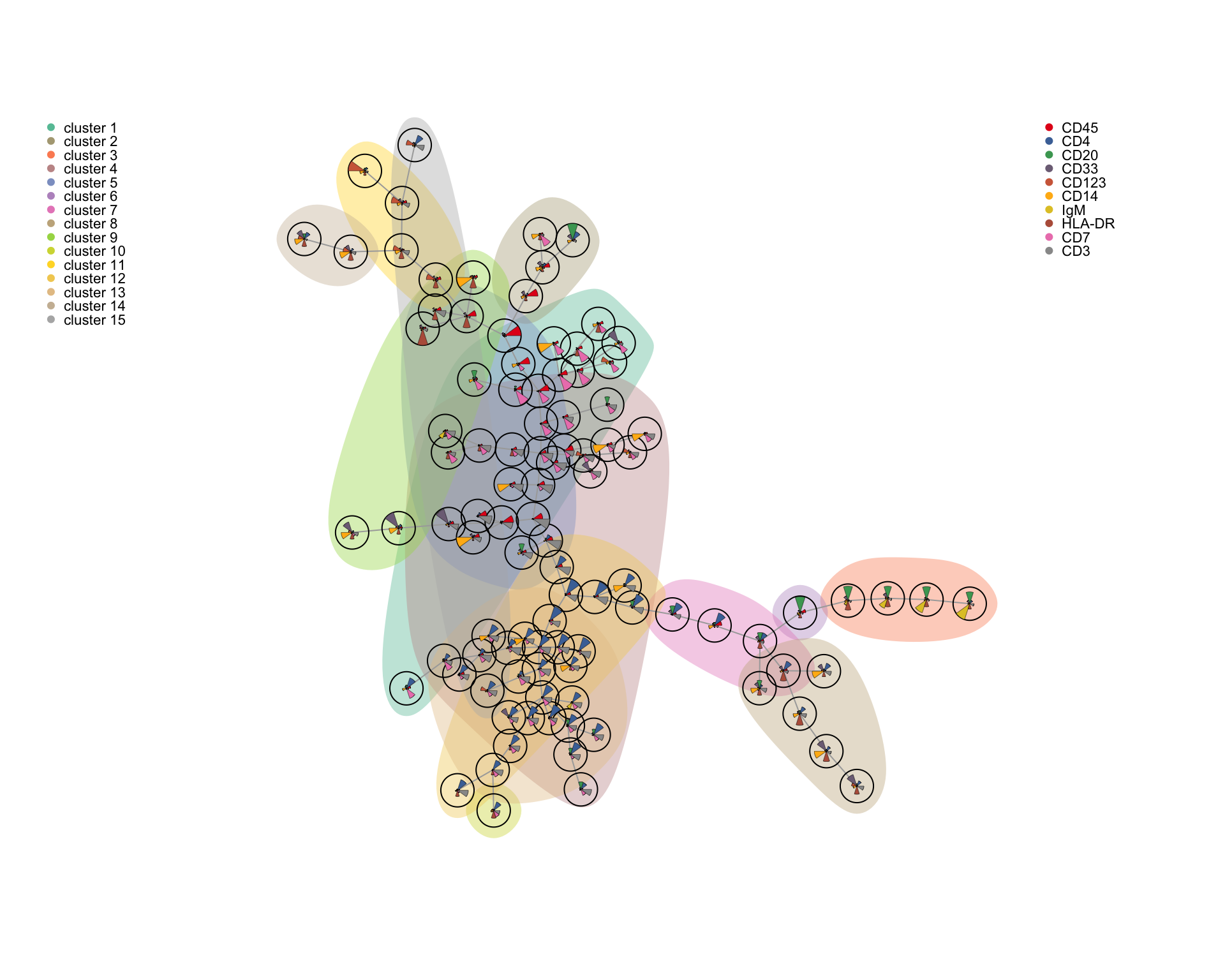

4.2.4 SCAFFoLD

SCAFFoLD (Single-Cell Analysis by Fixed Force- and Landmark-Directed) is, as the name indicates, a tool based on the use of force-directed graphs. First, cells are clustered, and then the clusters are spatialized in the plane. This means that similar clusters are put closer to each other than dissimilar ones. This results in a map. Furthermore, it is possible to highlight landmark populations that have been manually gated (working as references). As a result it is possible to compare a new sample to an old, to look for global structure changes. Samples can be clustered both individually and together.

To use SCAFFoLD, you need a C++ compiler and once that is ready you can run the program as follows:

# Loading required packages

require(devtools) || install.packages("devtools")

devtools::install_github("nolanlab/scaffold")

library(scaffold)

# Running SCAFFoLD (launching a GUI), please note that your current working directory should contain your files of interest before launching the GUI

scaffold.run()A complete use guide for the GUI is found here.

4.3 Visualizing marker expression in different clusters

Once cells have been clustered, examining the expression profiles of the cells in each cluster can be used to provide clues about the type of cells constituting the clusters. This can be done in various ways:

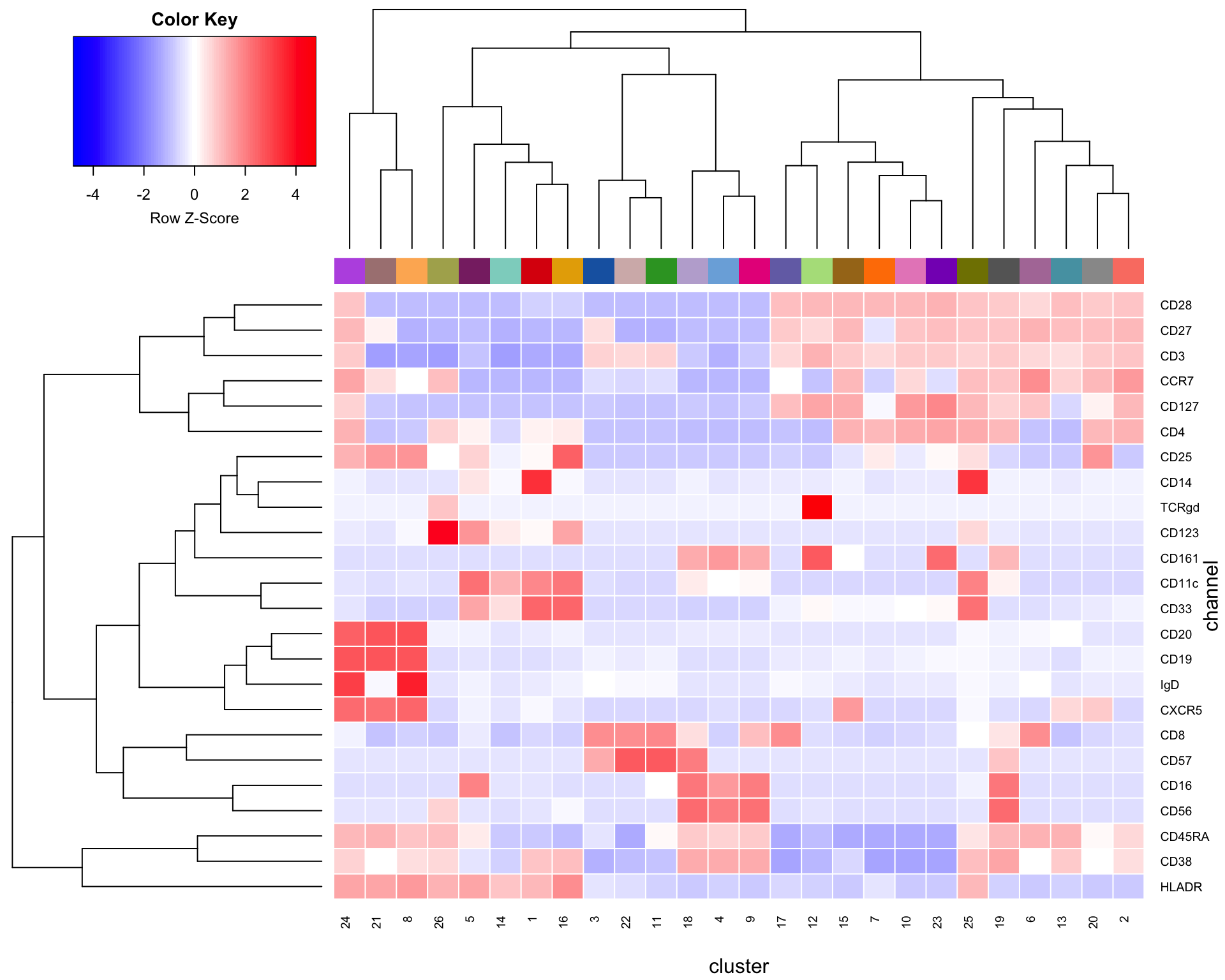

4.3.1 Simple heatmap

The most basic way to visually show the marker profiles of clusters is with a simple heatmap of median marker expressions. This of course has the drawback of ignoring the variance of the marker expression in each cluster, but offers does offer some insights into the composition of each cluster.

library(miscTools)

library(reshape2)

# Extract medians for each marker in each cluster

sub_matrix <- live[live$sample==fcs_files[1],lineage_channels]

cluster_matrix <- NULL

for(i in 1:max(clusters_pg)) {

cluster_matrix <- rbind(cluster_matrix, colMedians(sub_matrix[clusters_pg==i,]))

}

# Make plot

library(gplots)

cols = CATALYST:::.cluster_cols

par(mar = c(2,2,2,2))

heatmap.2(t(cluster_matrix), col=bluered(100), trace="none", density.info = "none", sepcolor = "white", sepwidth = c(0.001, 0.001), colsep=c(1:ncol(t(cluster_matrix))), rowsep=c(1:nrow(t(cluster_matrix))), xlab="cluster", ylab="channel", scale="row", ColSideColors=cols[1:max(clusters_pg)])

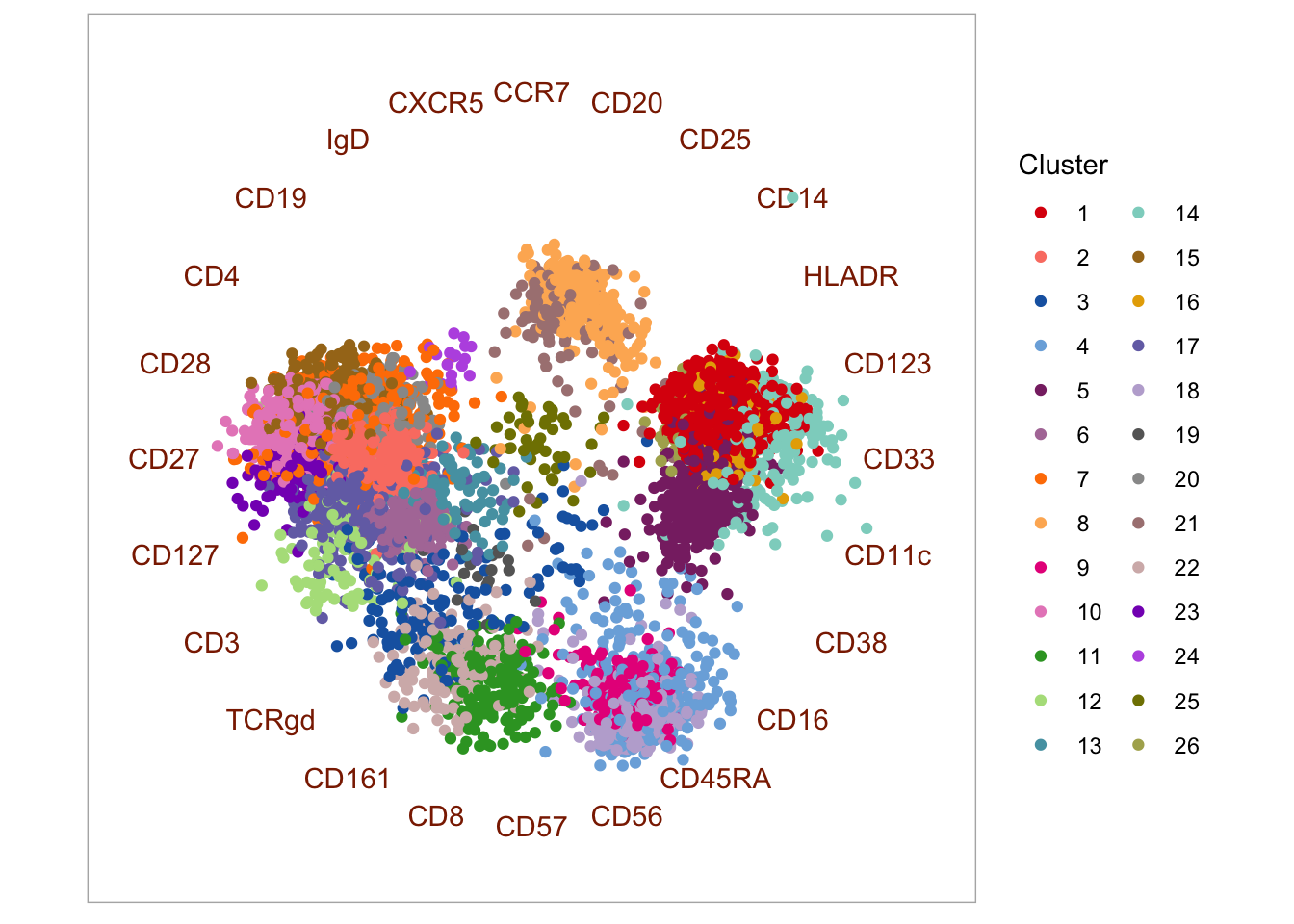

4.3.2 Radviz

Radviz is a visualization scheme that utilizes so-called dimensional anchors. Each anchor (in this case, each marker) is distributed on the circumference of a circle, and ordered in a way that markers whose expression correlate, are located closer to each other. Each event is then projected into the circular and located closer to markers that best describe them. The authors use the following descriptive analogy to explain the algorithm: “In Radviz, each dimension in the dataset is represented by a dimensional anchor, and each dimensional anchor is distributed evenly on a unit circle. Each line in the data set corresponds to a point in the projection, that is linked to every dimensional anchor by a spring. Each spring’s stiffness corresponds to the value for that particular thing in that particular dimension. The position of the point is defined as the point in the 2D space where the spring’s tension is minimum.” Read more here.

library(Radviz)

library(dplyr)

cell.S <- make.S(colnames(sub_matrix))

cell.sim <- cosine(as.matrix(sub_matrix))

set.seed(946)

optim.cell <- do.optimRadviz(cell.S, cell.sim, iter=100, n=1000)

cell.S <- make.S(tail(optim.cell$best,1)[[1]])

cell.rv <- do.radviz(as.matrix(sub_matrix),cell.S)

# Add cluster information

cell.rv$proj$data$Cluster <- as.factor(clusters_pg)

# Make plot using nice CATALYST colors

cols = CATALYST:::.cluster_cols

plot(cell.rv) +

geom_point(aes(color = Cluster)) +

scale_color_manual(values = cols)

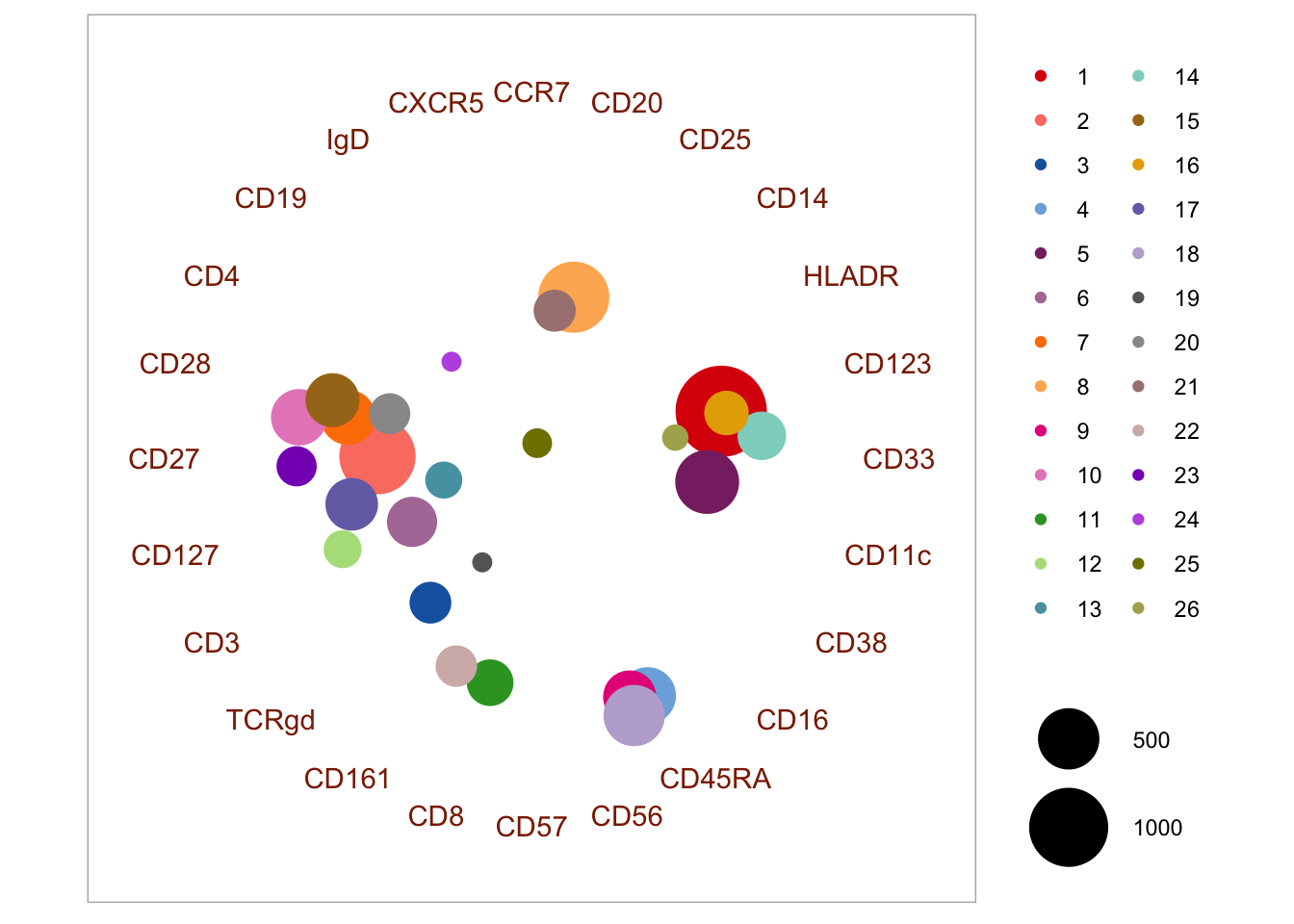

Clusters can be visualized more clearly in a “bubble plot” version of Radviz. In this plot, events are collapsed to the coordinates of their respective cluster centroid, where the size of the “bubble” corresponds to the size of the cluster.

p <- bubbleRadviz(cell.rv, group = "Cluster")

# Manual fix for order in legend and color of bubbles to match the plot above

levels(p$layers[[1]]$data$Cluster) <- as.character(1:max(clusters_pg)); p$layers[[1]]$data$Cluster[1:max(clusters_pg)] <- 1:max(clusters_pg)

# Make the plot

p + scale_color_manual(values = cols)

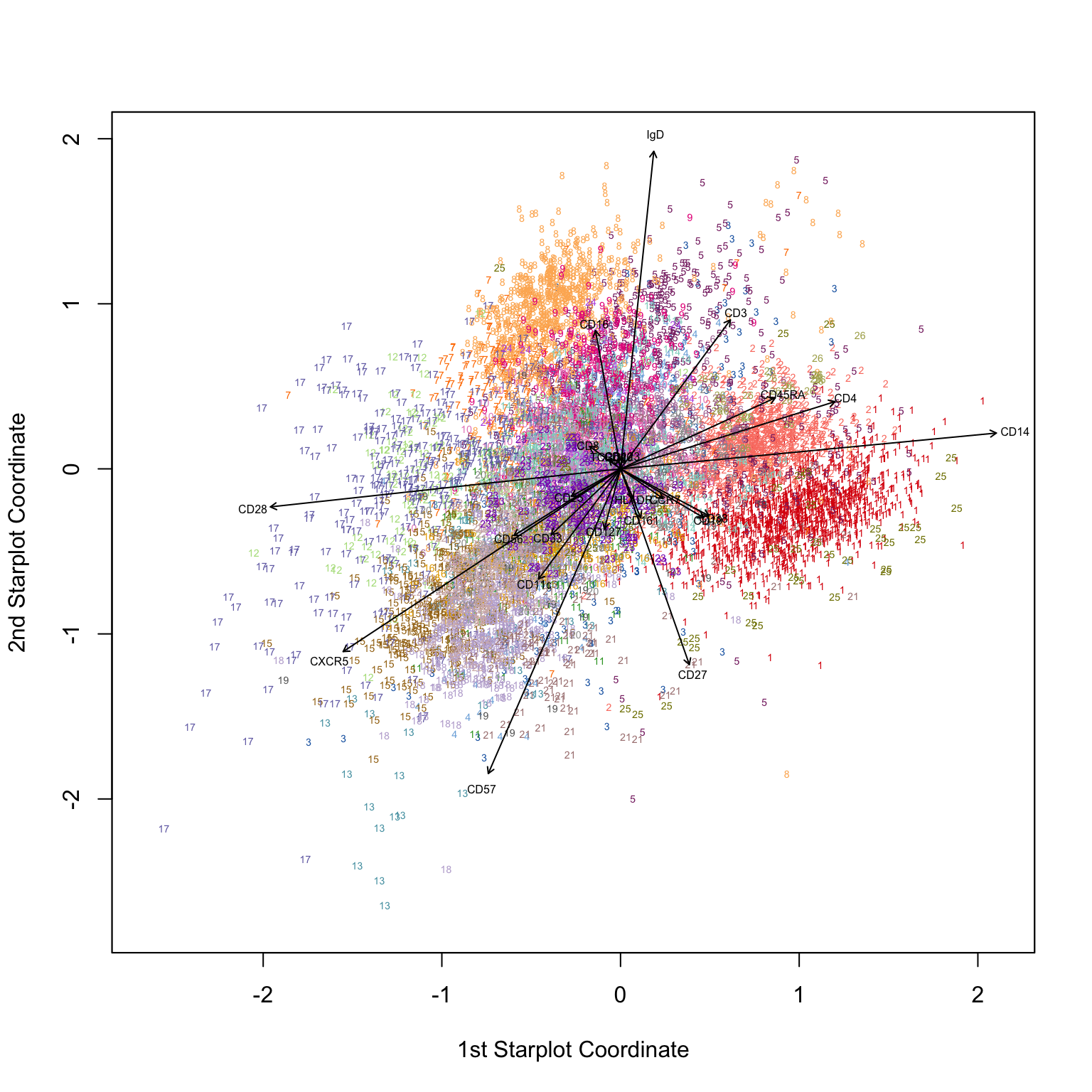

4.3.3 Penalized supervised star plot

The penalized supervised star method was presented by Holmes et al. (2019). It is a way that may be used to visualize marker expression in clusters in a 2D plot - similarly to the Radviz approach. The function is available here along with a description of the input arguments. The markers with the longest arrows in the visualization may be interpreted as those driving most of the clustering. Part of the output is also an importance value (r) per marker, which is based on the ratio of inter-cluster and intra-cluster variance. There are a few parameters to set in the function, including \(\lambda_{1}\) and \(\lambda_{2}\). One can set multiple of these in the call and it will automatically run a grid search to optimize visualization.

library(matrixcalc)

library(plotrix)

library(JPEN)

library(VCA)

# Define some colors to use

cols = CATALYST:::.cluster_cols

# First, we make a dataframe with the data. For PSS, the input is a expression dataframe in which

# the first column containes integer cluster IDs (1, 2, .., n) without any gaps in the integer set.

pss_matrix <- cbind.data.frame(clusters_pg, live[live$sample==fcs_files[1],lineage_channels])

# Make the plot

pss <- SupStarPlots(DF = pss_matrix, lambda1 = seq(0.001, 20.001, 10), lambda2 = seq(0.001, 20.001, 10),

ColOpt = cols, M = 1.0)## Marker r

## 18 CD28 22.283594

## 8 CD3 15.386340

## 15 CD20 13.679707

## 5 IgD 10.183680

## 2 CD19 9.780844

## 3 CD4 9.716805

## 6 CD11c 9.512544

## 23 HLADR 8.896232

## 17 CD33 8.671530

## 12 CXCR5 7.857189

## 7 CD16 7.807980

## 10 CD27 6.706412

## 11 CD14 6.386395

## 22 CD56 6.091298

## 1 CD57 4.560445

## 4 CD8 4.534566

## 13 CCR7 3.705037

## 16 CD127 3.608410

## 14 CD45RA 3.247039

## 21 CD123 2.085785

## 9 CD38 1.765027

## 19 CD161 1.344447

## 24 CD25 1.014503

## 20 TCRgd 0.244795

4.4 Analysis of differential abundance of cell populations and proteins

Once subpopulations are clustered in each sample, the next step will often be to elucidate associations between cluster frequencies and/or protein expression and a given condition or time. These exercises closely resemble differential expression analyses used in transcriptomics for decades, and the bioinformatics toolbox contains multiple tools for basic significance tests and linear models. In addition, the GUI tools Citrus and Statistical Scaffold were developed for this purpose.

Citrus (cluster identification, characterization, and regression) (Bruggner et al. 2014) works by combining hierarchical clustering of events in all samples aggregated into one. Then, descriptive metrics for each cluster are calculated, including percentage of cells assigned to each cluster, and the median expression of lineage markers in each cluster. These data are then used in regularized classification models to elucidate factors differing across conditions.

The Statistical Scaffold method (Spitzer et al. 2017) works in a similar way. First, populations of interest are separated by manual gating in a representative sample. These populations will serve as landmark nodes on a scaffold map, which serves as a template for mapping events in the remaining samples using the cosine similarity between the vectors of median marker values of each cluster. Lastly, the Significance Across Microarrays permutation test (Tusher et al. 2001) is applied to test for differences in population frequencies across conditions.

One major shortcoming of existing tools is the inability to handle more complex experimental setups, such as longitudinal studies, or studies with multiple factors and covariates. Generalized linear models (GLM) for differential expression analysis, popularized for transcriptomic analyses by tools such as limma (Ritchie et al. 2015) for microarray data and DEseq2 (Love et al. 2014) or EdgeR (Robinson et al. 2010) for RNA sequencing data, accepts model matrices with co-variates for complex experimental designs. Recently, Lun and colleagues leveraged GLMs from the EdgeR software specifically for mass cytometry data and showed these models outperform Citrus on legacy data (Lun et al. 2017).

Lastly, it is important to consider that the immune system consists of multiple functional cell populations, which are coordinated in a complex interplay in response to various conditions. It is therefore sometimes more informative to examine population frequencies as dependent variables by examining correlations or ratios between frequencies (Bailur et al. 2017). Additionally, natural variations in the immune system are likely to mask the difference conferred by time or conditions when looking at a single variable, even if analyzing a large number of samples. Much like transcriptomics analyses, the immune system does not consist of independent variables, and neither the abundance of protein markers on individual cells nor the frequencies of individual cell populations can be assumed independent from the remaining variables.

4.4.1 Analysis of differential abundance of cells in clusters

The cell counts in each cluster is the easiest to compare, as this roughly follows a negative binomial distribution and can be flexibly modeled using a generalized linear model (GLM) as implemented in, for example, the edgeR package for RNA-seq transcriptomics data. In this example, we will use the data generated by Bodenmiller et al. and identify clusters with a differential abundance of cells between conditions.

First, we import the data and create a dataframe with all the samples:

# Import data as Summarized Experiment

library(HDCytoData)

data_bcrxl <- Bodenmiller_BCR_XL_SE()

exprs_set <- cbind.data.frame(data_bcrxl@assays$data$exprs, rowData(data_bcrxl)[,1:3])

# Asinh-transform the measured markers

exprs_set[,which(colData(data_bcrxl)$marker_class != 'none')] <- asinh(exprs_set[,which(colData(data_bcrxl)$marker_class != 'none')]/5)

# Set channels

pregating_channels <- c("DNA-1", "DNA-2")

lineage_channels <- c("CD45", "CD4", "CD20", "CD33", "CD123", "CD14", "IgM", "HLA-DR", "CD7", "CD3")

functional_channels <- c("pNFkB", "pp38", "pStat5", "pAkt", "pStat1", "pSHP2", "pZap70", "pStat3", "pSlp76", "pBtk", "pPlcg2", "pErk", "pLat", "pS6")

instrument_channels <- c("Cell_length", "Time", "BC1", "BC2", "BC3", "BC4", "BC5", "BC6", "BC7")In the interest of speed, we here down sample to 1000 random events from each sample. Remember to normalize to the total number of events in each sample if the full samples are used. We then cluster using the cytofkit2 implementation of Phenograph and create a table with cell counts in each cluster for each sample.

# Sample 1000 random events form each sample

sub <- NULL

for(i in unique(exprs_set$sample_id)) {

temp <- exprs_set[exprs_set$sample_id==i,]

set.seed(46)

sub <- rbind(sub, temp[sample(nrow(temp), 1000, replace=FALSE),lineage_channels])

}

sample <- rep(unique(exprs_set$sample_id), each=1000)

# cluster

library(cytofkit2)

clusters_pg <- cytof_cluster(xdata = sub, method = "Rphenograph")

# make count table - here, you should convert to percentage if samples are of uneven sizes

cell_counts <- table(clusters_pg, sample)

conditions <- data_bcrxl@metadata$experiment_info$group_id

patients <- data_bcrxl@metadata$experiment_info$patient_idLastly, we evaluate differential abundance in each cluster using a NB-GLM from the edgeR package:

# calculate differential abundance accounting for paired design

library(edgeR)

dge <- DGEList(cell_counts, lib.size=rep(1000,16))

design <- model.matrix(~patients + conditions)

y <- estimateDisp(dge, design)

fit <- glmQLFit(y, design, robust=TRUE)

res <- glmQLFTest(fit, coef = 'conditionsBCR-XL')

topTags(res)## Coefficient: conditionsBCR-XL

## logFC logCPM F PValue FDR

## 11 -2.323159 15.72490 129.57413 1.323060e-20 2.646119e-19

## 15 -2.485496 16.04217 98.34914 3.747068e-17 3.747068e-16

## 9 1.907293 15.64828 72.32328 7.399927e-14 4.933284e-13

## 6 2.635548 15.35019 54.79099 2.325811e-11 1.162906e-10

## 19 -1.583595 15.75119 60.69782 7.169961e-11 2.867985e-10

## 16 -1.997757 16.35019 44.62465 2.661378e-09 8.871261e-09

## 8 1.724658 14.90839 34.93690 3.506403e-08 1.001830e-07

## 20 1.285307 14.87590 18.89864 2.978042e-05 7.445104e-05

## 10 1.048916 16.09188 15.59336 1.352719e-04 2.729150e-04

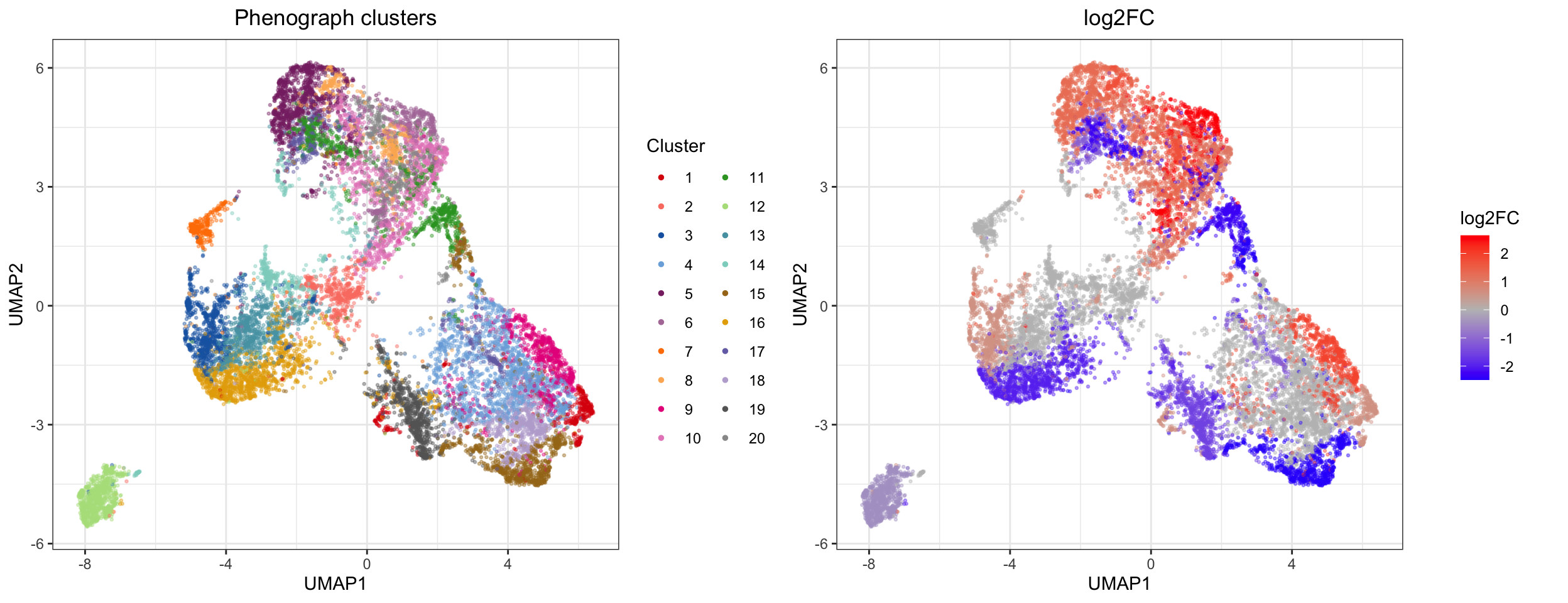

## 5 1.284918 16.17677 15.57463 1.364575e-04 2.729150e-04We can now visualize the log2 fold change in clusters with a significant differential abundance between the two conditions:

# visualize on UMAP

library(uwot)

library(ggplot2)

library(cowplot)

library(RColorBrewer)

# Calculate umap coordinates

set.seed(743)

umap <- umap(sub)

# Make a plotting data frame

plot_df <- cbind.data.frame(umap, as.factor(clusters_pg), res$table$logFC[clusters_pg], p.adjust(res$table$PValue, method = 'BH')[clusters_pg], rep(conditions, each = 1000)); colnames(plot_df) <- c(paste0('UMAP', 1:2), 'Cluster', 'log2FC', 'fdr', 'Group')

# Adjust to only color significant p-values after FDR corection

plot_df$log2FC[plot_df$fdr > 0.05] <- 0

# Get some nice colors

cols <- CATALYST:::.cluster_cols

# Generate the plots

p1 <- ggplot2::ggplot(plot_df, aes(x = UMAP1, y = UMAP2)) +

geom_point(aes(color = Cluster), alpha = 0.4, size = 0.5) +

scale_color_manual(values = cols) +

guides(colour = guide_legend(override.aes = list(alpha = 1, size = 1), ncol = 2)) +

ggtitle('Phenograph clusters') + theme_bw() +

theme(plot.title = element_text(hjust = 0.5))

p2 <- ggplot2::ggplot(plot_df, aes(x = UMAP1, y = UMAP2)) +

geom_point(aes(color = log2FC), alpha = 0.4, size = 0.5) +

scale_colour_gradient2(low = "blue", mid = "grey", high = "red") +

ggtitle('log2FC') + theme_bw() +

theme(plot.title = element_text(hjust = 0.5))

plot_grid(p1, p2, align = 'v')

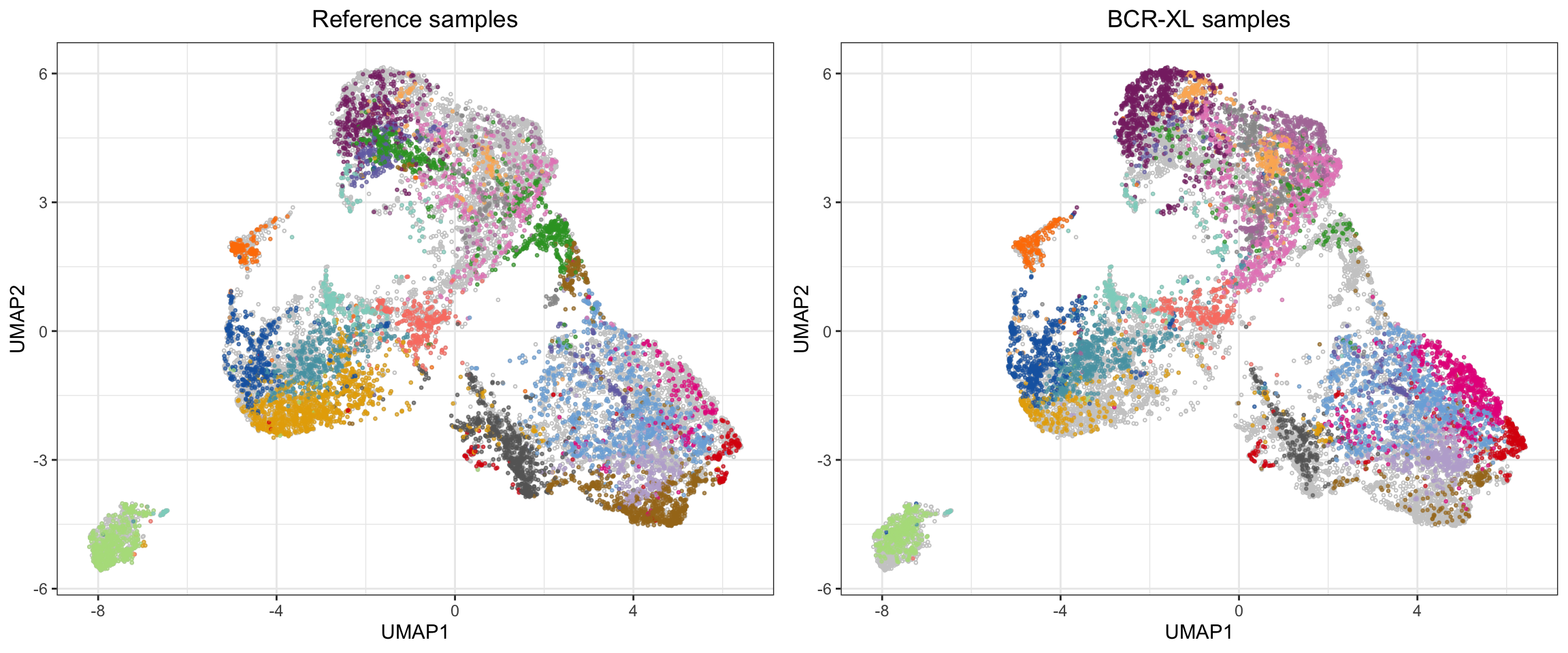

We can also visually show the cell abundance difference between clusters using the approach in clustering section:

# Plot each sample on UMAP canvas consisting of all events

p <- list()

for (s in 1:2) {

p[[s]] <- ggplot2::ggplot(plot_df[plot_df$Group==levels(plot_df$Group)[s],], aes(x = UMAP1, y = UMAP2)) +

geom_point(data = plot_df, pch = 21, color = 'grey80', size = 0.5) +

geom_point(aes(color = Cluster), alpha = 0.6, size = 0.5, show.legend = F) +

scale_color_manual(values = cols) +

ggtitle(paste(levels(plot_df$Group)[s], "samples")) +

theme_bw() +

theme(plot.title = element_text(hjust = 0.5))

}

plot_grid(plotlist = p)

4.4.1.1 cydar

The cydar tool works in much the same way as above - clustering followed by statistical testing for differential abundance of cells in each cluster. In this method, the clustering is done by creating hyperspherical clusters. This partitioning is similar to that of k-means clustering, but here a constant distance measure (the radius) is used to define the hyperspheres rather than dividing the events into Voronoi cells based on the nearest mean. This means that rather than getting a (somewhat) interpretable cluster structure (where each cluster can be labeled as a canonical cell population), hyperspherical partitioning is somewhat abstract and not directly interpretable. Another feature of the hypersphere partitioning is allowing multi-membership (which one can argue makes more biological sense than strictly allowing cells to belong to one cluster), but this again makes the partitioning harder to interpret in 2 or 3 dimensional space. Although harder to directly interpret, choosing a k that is greater than “reality”, is certainly better than selecting a k that is too small: “under-clustering” a highly heterogeneous cell mixture such as PBMC, will potentially result in comparing biologically irrelevant subsets. However, the trade-off of erring on the safe side is that in order for the cydar output to be biologically interpretable, some post-processing is necessary. The authors provide an excellent discussion of this in their paper and in their R package vignette

Now for some code!

library(cydar)

library(ncdfFlow)

# Sample 1000 random events form each sample - cydar takes data as a list of matrices

sub <- list()

for(i in unique(exprs_set$sample)) {

temp <- exprs_set[exprs_set$sample==i,]

set.seed(23)

sub[[i]] <- temp[sample(nrow(temp), 1000, replace=FALSE),lineage_channels]

}

conditions <- data_bcrxl@metadata$experiment_info$group_id

patient.id <- data_bcrxl@metadata$experiment_info$patient_id

sample.id <- rep(data_bcrxl@metadata$experiment_info$sample_id, each = 1000)

# Count the cells into hyperspheres with a radius equal to 0.5 times the square root of the number of markers

set.seed(12)

cd_all <- prepareCellData(sub)

cd_all <- countCells(cd_all, tol=0.5)

# Testing for significant differences in abundance - accounting for paired design

library(edgeR)

y <- DGEList(assay(cd_all), lib.size=cd_all$totals)

keep <- aveLogCPM(y) >= aveLogCPM(5, mean(cd_all$totals))

cd <- cd_all[keep,]

y <- y[keep,]

design <- model.matrix(~patient.id+conditions)

y <- estimateDisp(y, design)

fit <- glmQLFit(y, design, robust=TRUE)

res <- glmQLFTest(fit)

# Controlling the spatial FDR

qvals <- spatialFDR(intensities(cd), res$table$PValue)

is.sig <- qvals <= 0.05In this case we find that 325 out of 551 hyperspheres contain a significantly different number of cells between our two conditions.

# Print the number of hypersphere with significantly different cell abundance between case and control

summary(is.sig)## Mode FALSE TRUE

## logical 226 3254.4.2 Analysis of differential expression of proteins between clusters

The expression of lineage markers between different clusters will naturally differ, as differential expression is the very basis for all the clustering algorithms discussed here. However, it may be of interest to examine whether the expression of functional markers differs within a cluster that is considered to represent the same cell type in two different conditions.

Currently, the most widely utilized approach is to compare the median expression a given protein in each sample across conditions for a specific cluster. There are some potentially serious caveats to this approach which we will discuss a bit further down. But first, let’s go through a simple example of this common approach:

# Sample 1000 random events form each sample

sub <- NULL

for(i in unique(exprs_set$sample)) {

temp <- exprs_set[exprs_set$sample==i,]

set.seed(84)

sub <- rbind(sub, temp[sample(nrow(temp), 1000, replace=FALSE),c(lineage_channels, functional_channels)])

}

sample <- rep(unique(exprs_set$sample), each=1000)

# cluster

library(cytofkit2)

clusters_pg <- cytof_cluster(xdata = sub[,lineage_channels], method = "Rphenograph")

# Make median expression table (samples x functional proteins) for each cluster

# - here we generate the table for cluster 1

sub_cluster <- sub[clusters_pg==1,functional_channels]

sample_cluster <- sample[clusters_pg==1]

exprs_cluster <- NULL

for(i in unique(sample_cluster)) {

exprs_cluster <- cbind(exprs_cluster, miscTools::colMedians(sub_cluster[sample_cluster==i,]))

}

colnames(exprs_cluster) <- unique(sample_cluster)Again we are left with a simple expression matrix, to which you can apply your favorite statistics. Here we use a linear model:

# Basic DE test using limma

library(limma)

conditions_test <- factor(gsub(".*(Reference|BCR-XL).*", "\\1", colnames(exprs_cluster)))

patient_test <- factor(gsub(".*patient(.)_.*", "\\1", colnames(exprs_cluster)))

design <- model.matrix(~patient_test+conditions_test)

fit <- lmFit(exprs_cluster, design)

fit <- eBayes(fit)

topTable(fit, coef="conditions_testReference", number="inf", adjust.method="bonferroni")## logFC AveExpr t P.Value adj.P.Val B

## pNFkB 1.122351516 1.522762047 6.9921970 0.0001118117 0.001565364 1.6820193

## pBtk 0.291127735 0.094915744 6.0447849 0.0003038642 0.004254099 0.6457291

## pAkt -0.434887239 2.042865026 -4.1624987 0.0031326863 0.043857608 -1.7925238

## pSlp76 0.190229055 0.024751506 3.8081627 0.0051459466 0.072043252 -2.3095335

## pp38 0.720171096 0.301162316 3.1650575 0.0132397265 0.185356171 -3.2857430

## pLat -0.228809018 0.264863056 -2.8417041 0.0216854614 0.303596460 -3.7886220

## pStat1 -0.420684203 0.991877897 -2.7574389 0.0246986828 0.345781559 -3.9201382

## pStat3 -0.132816457 0.083995254 -2.1470853 0.0639592407 0.895429370 -4.8624590

## pErk -0.241943128 0.174066127 -1.7215685 0.1233329347 1.000000000 -5.4829087

## pS6 -0.069115380 -0.008251051 -1.6690707 0.1335363558 1.000000000 -5.5555782

## pPlcg2 -0.193210279 0.249821843 -1.6260164 0.1424801841 1.000000000 -5.6143702

## pZap70 -0.032194520 -0.057617285 -1.6137051 0.1451368594 1.000000000 -5.6310419

## pSHP2 -0.022827538 -0.066722694 -1.5205028 0.1667571584 1.000000000 -5.7551120

## pStat5 0.006800125 -0.062395905 0.4910166 0.6365596727 1.000000000 -6.7519840So in cluster 1, we have three significantly differentially expressed proteins between the Reference and BCR-XL samples, based on the median expression of the protein.

The most glaring (potential) issue with using the median expression is that we assume homoscedasticity and ignore the variance of the expression within each sample. Another potential issue is that for the linear models, we assume that the expression values (and median values) follow a Gaussian distribution. As mentioned earlier, the arcsinh transformation is first and foremost a cosmetic operation for biaxial plots, and the raw counts (without the default randomization by the Fluidigm software) roughly follows a (somewhat zero-inflated) negative binomial distribution. As such, a more appropriate statistic may be adopted from common practices in single cell transcriptomics analyses, but the error model has to be adapted: for example, dropout events are not an issue in mass cytometry, but the isotope counts are subject to stochastic noise and antibody binding introduces some fairly systematic noise (as discussed in our manuscript) that should be modeled appropriately. Lastly, we measure far fewer features in mass cytometry, but vastly more observations compared with single cell transcriptomics. Until such a model is formulated, linear modeling of median marker expression remains the state-of-the-art.

4.4.3 Citrus